A data lakehouse is a data repository that combines the scalability, cost-effectiveness, and flexibility of a data lake with the governance and query capabilities of a data warehouse. Using open table formats and data catalogs, data lakehouses offer the SQL-based functionality of data warehouses while providing visibility into, and control over, structured data.

With these combined capabilities, the data lakehouse can support a wide range of analytical and operational uses of data, ranging from reporting and business intelligence to generative and agentic AI.

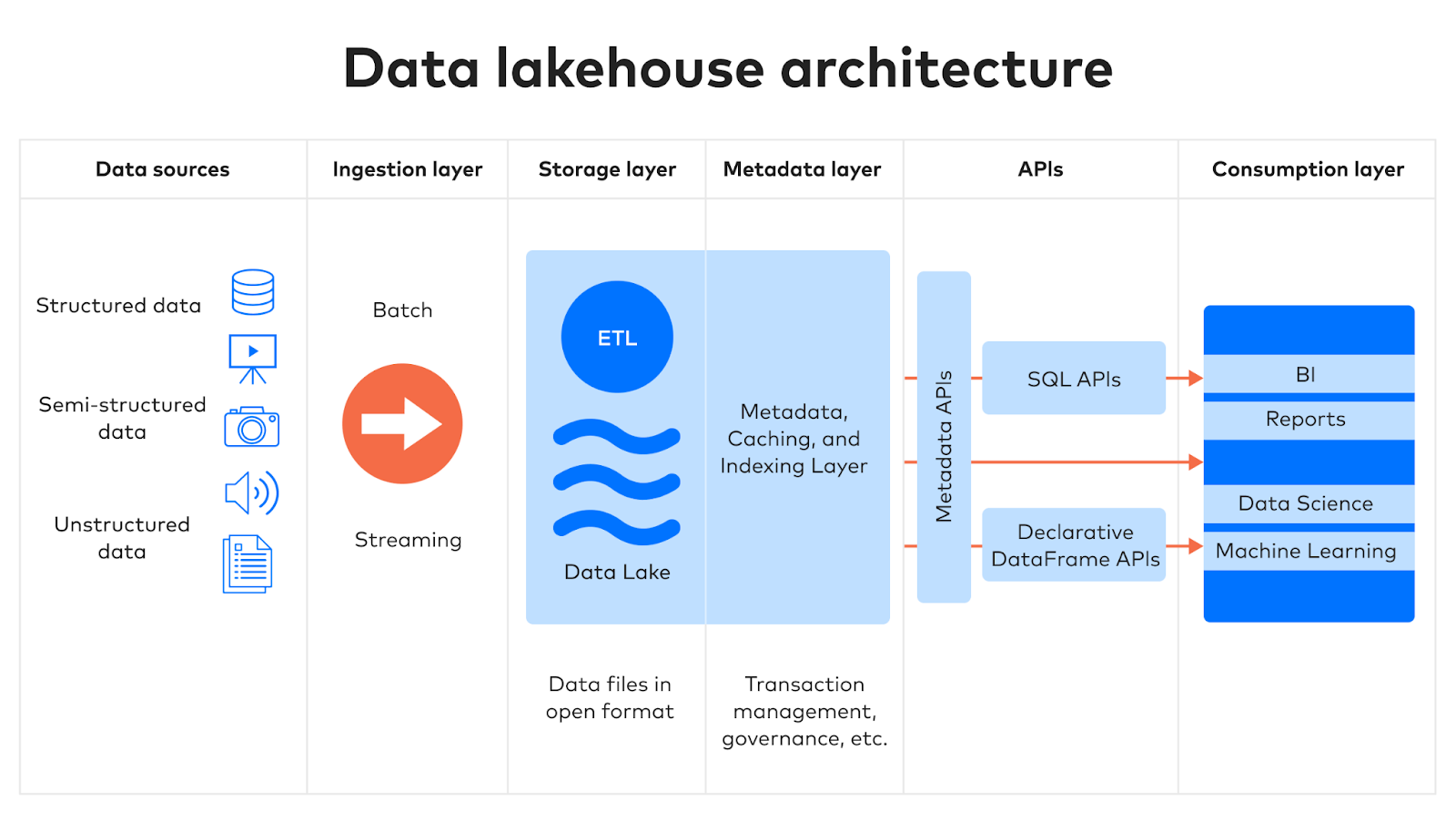

Data lakehouse architecture

The data lakehouse architecture consists of five layers.

1. Ingestion

In the first layer, data is collected and delivered to the storage layer from sources such as:

- Databases, including both relational database management systems (RDBMSs) and semi-structured NoSQL databases

- Enterprise resource planning (ERP), customer relationship management (CRM), and other Software-as-a-Service (SaaS) applications

- Event streams

- Files, including unstructured data — documents, videos, audio, and other media — of all kinds

The Fivetran Managed Data Lake Service is purpose-designed to facilitate this step. In addition to moving data from source to destination, the Fivetran pipeline normalizes, compacts, and deduplicates data while converting it to an open table format.
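To make the normalize-and-deduplicate step more concrete, here is a minimal Python sketch. It is not Fivetran's implementation. The function names, the snake_case naming convention, and the "keep the row with the highest version value" rule are assumptions chosen for illustration, and compaction and conversion to an open table format are left out.

```python
import re
from typing import Any, Dict, List


def normalize_column(name: str) -> str:
    """Convert a source column name such as 'CustomerID' or 'Order Date' to snake_case."""
    # Replace runs of non-alphanumeric characters (spaces, dashes, dots) with "_".
    name = re.sub(r"[^0-9A-Za-z]+", "_", name.strip())
    # Insert "_" at lower-to-upper boundaries: "CustomerID" -> "Customer_ID".
    name = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", "_", name)
    return name.lower().strip("_")


def deduplicate(rows: List[Dict[str, Any]], key: str, version: str) -> List[Dict[str, Any]]:
    """Keep one row per primary key: the one with the highest version value."""
    latest: Dict[Any, Dict[str, Any]] = {}
    for row in rows:
        k = row[key]
        if k not in latest or row[version] > latest[k][version]:
            latest[k] = row
    return list(latest.values())


def prepare_batch(raw_rows: List[Dict[str, Any]], key: str, version: str) -> List[Dict[str, Any]]:
    """Normalize column names, then deduplicate on the normalized key column."""
    normalized = [{normalize_column(col): val for col, val in row.items()} for row in raw_rows]
    return deduplicate(normalized, normalize_column(key), normalize_column(version))


if __name__ == "__main__":
    batch = [
        {"CustomerID": 1, "Email": "old@example.com", "UpdatedAt": 1},
        {"CustomerID": 1, "Email": "new@example.com", "UpdatedAt": 2},
        {"CustomerID": 2, "Email": "c@example.com", "UpdatedAt": 1},
    ]
    for row in prepare_batch(batch, key="CustomerID", version="UpdatedAt"):
        print(row)
```

Normalizing names before deduplicating means the key and version columns can be referred to by their source names, even though the stored columns use the normalized form.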

2. Storage

Like conventional data lakes, data lakehouses offer scalability and flexibility, decoupling storage from compute. Data lakehouses can store structured and semi-structured data in open table formats, and unstructured data in raw files. Open table formats also enable users to implement features such as ACID transactions, indexing, caching, and data versioning.
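The ACID and versioning features that open table formats add are generally built on a log or chain of metadata commits: each commit records which data files were added or removed, and readers reconstruct a given table version from those commits. The toy `TransactionLog` class below is a hypothetical sketch of that idea, not the actual protocol of any format. It uses an exclusive file create (`O_CREAT | O_EXCL`) as the atomic "claim the next version" step; production formats rely on atomic operations provided by the file system, object store, or catalog for this.

```python
import json
import os
import tempfile
from typing import Dict, List, Optional, Set


class TransactionLog:
    """Toy commit log: commit N is stored as a JSON file named by its version number."""

    def __init__(self, root: str) -> None:
        self.root = root
        os.makedirs(root, exist_ok=True)

    def _path(self, version: int) -> str:
        # Zero-padded names keep the files in version order when listed.
        return os.path.join(self.root, f"{version:020d}.json")

    def latest_version(self) -> int:
        versions = [int(name[:-5]) for name in os.listdir(self.root) if name.endswith(".json")]
        return max(versions, default=-1)

    def commit(self, read_version: int, actions: List[Dict[str, str]]) -> int:
        """Atomically claim version read_version + 1, or fail if another writer got there first."""
        new_version = read_version + 1
        try:
            # O_EXCL makes creation fail if the file already exists (optimistic concurrency).
            fd = os.open(self._path(new_version), os.O_WRONLY | os.O_CREAT | os.O_EXCL)
        except FileExistsError:
            raise RuntimeError(
                f"conflict: version {new_version} already exists; re-read and retry"
            ) from None
        with os.fdopen(fd, "w") as f:
            json.dump(actions, f)
        return new_version

    def snapshot(self, version: Optional[int] = None) -> Set[str]:
        """Replay add/remove actions up to `version` (default: latest) to list live data files."""
        if version is None:
            version = self.latest_version()
        live: Set[str] = set()
        for v in range(version + 1):
            with open(self._path(v)) as f:
                for action in json.load(f):
                    if action["op"] == "add":
                        live.add(action["path"])
                    elif action["op"] == "remove":
                        live.discard(action["path"])
        return live


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        log = TransactionLog(root)
        v0 = log.commit(-1, [{"op": "add", "path": "part-0.parquet"}])
        v1 = log.commit(v0, [{"op": "add", "path": "part-1.parquet"}])
        v2 = log.commit(v1, [{"op": "remove", "path": "part-0.parquet"}])
        print(sorted(log.snapshot()))    # ['part-1.parquet']
        print(sorted(log.snapshot(v1)))  # ['part-0.parquet', 'part-1.parquet']
```

Two writers that both start from version 1 cannot both commit version 2: the second `os.open` call fails, and that writer has to re-read the table and retry. Reading an older version ("time travel") amounts to replaying fewer commits.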

3. Metadata

Metadata is information about other data. A data lakehouse’s metadata layer is the key advantage it has over ungoverned data lakes. Technical data catalogs can document every object in the lake storage. For structured and semi-structured data in particular, open table formats store metadata, such as version history and schemas, in files that can be accessed either directly or through a technical data catalog.
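As a minimal sketch of reading that metadata directly from a file, the snippet below parses a hypothetical, simplified metadata document and answers three questions: what the current schema is, what versions the table has gone through, and which columns changed between schema versions. The field names (`current_schema_id`, `schemas`, `snapshots`) are illustrative assumptions; real formats such as Apache Iceberg and Delta Lake each define their own, richer layouts.

```python
import json
from typing import Any, Dict, List, Tuple

# Hypothetical, simplified table metadata file (field names are illustrative only).
METADATA_JSON = """
{
  "current_schema_id": 1,
  "schemas": [
    {"schema_id": 0, "fields": [{"name": "id", "type": "long"},
                                {"name": "email", "type": "string"}]},
    {"schema_id": 1, "fields": [{"name": "id", "type": "long"},
                                {"name": "email", "type": "string"},
                                {"name": "signup_date", "type": "date"}]}
  ],
  "snapshots": [
    {"snapshot_id": 100, "schema_id": 0, "operation": "append"},
    {"snapshot_id": 101, "schema_id": 1, "operation": "append"}
  ]
}
"""


def current_schema(meta: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Return (column, type) pairs for the table's current schema."""
    by_id = {s["schema_id"]: s for s in meta["schemas"]}
    return [(f["name"], f["type"]) for f in by_id[meta["current_schema_id"]]["fields"]]


def history(meta: Dict[str, Any]) -> List[Tuple[int, str, int]]:
    """Return (snapshot_id, operation, schema_id) for each table version, oldest first."""
    return [(s["snapshot_id"], s["operation"], s["schema_id"]) for s in meta["snapshots"]]


def schema_diff(meta: Dict[str, Any], old_id: int, new_id: int) -> Tuple[List[str], List[str]]:
    """Return (added_columns, dropped_columns) between two schema versions."""
    cols = {s["schema_id"]: {f["name"] for f in s["fields"]} for s in meta["schemas"]}
    return sorted(cols[new_id] - cols[old_id]), sorted(cols[old_id] - cols[new_id])


if __name__ == "__main__":
    meta = json.loads(METADATA_JSON)
    print(current_schema(meta))
    print(history(meta))
    print(schema_diff(meta, 0, 1))  # (['signup_date'], [])
```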

Unlike conventional data lakes that often become “data swamps,” the data lakehouse enables data teams to more easily observe and control, i.e., govern, the contents of the lakehouse. Auditing and data governance can be done directly on the data lake, enhancing data integrity and building trust in the data and its derivative products.

Since the metadata layer represents all the contents of the storage layer, users can apply transformations to implement data warehouse schema designs, such as star or snowflake schemas, while tracking schema changes and lineage.
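As an illustration of building a star schema through transformations, the sketch below uses Python's built-in `sqlite3` module as a stand-in for a lakehouse SQL engine: raw order rows are split into a customer dimension table and an orders fact table, which are then joined for an aggregate query. The table and column names are hypothetical, and a real star schema would usually have more dimensions (for example, a date dimension).

```python
import sqlite3
from typing import List, Tuple

TRANSFORM_SQL = """
CREATE TABLE raw_orders (
    order_id INTEGER, customer_email TEXT, customer_region TEXT, order_date TEXT, amount REAL
);
INSERT INTO raw_orders VALUES
    (1, 'a@example.com', 'EMEA', '2024-01-05', 120.0),
    (2, 'b@example.com', 'AMER', '2024-01-05',  80.0),
    (3, 'a@example.com', 'EMEA', '2024-02-10',  50.0);

-- Dimension table: one row per customer, with a surrogate key assigned by SQLite.
CREATE TABLE dim_customer (
    customer_key INTEGER PRIMARY KEY, customer_email TEXT, customer_region TEXT
);
INSERT INTO dim_customer (customer_email, customer_region)
SELECT DISTINCT customer_email, customer_region FROM raw_orders ORDER BY customer_email;

-- Fact table: one row per order, pointing at the dimension by surrogate key.
CREATE TABLE fact_orders AS
SELECT o.order_id, c.customer_key, o.order_date, o.amount
FROM raw_orders AS o
JOIN dim_customer AS c ON o.customer_email = c.customer_email;
"""


def build_star_schema(conn: sqlite3.Connection) -> None:
    """Run the transformation that derives the dimension and fact tables."""
    conn.executescript(TRANSFORM_SQL)


def revenue_by_region(conn: sqlite3.Connection) -> List[Tuple[str, float]]:
    """A typical star-schema query: join the fact table to a dimension and aggregate."""
    return conn.execute(
        """
        SELECT c.customer_region, SUM(f.amount) AS revenue
        FROM fact_orders AS f
        JOIN dim_customer AS c ON f.customer_key = c.customer_key
        GROUP BY c.customer_region
        ORDER BY c.customer_region
        """
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    build_star_schema(conn)
    print(revenue_by_region(conn))  # [('AMER', 80.0), ('EMEA', 170.0)]
```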

4. Query engine/API

Query engines enable data professionals to query and transform data in the data lakehouse as needed using SQL. Since data lakehouses decouple storage and compute, data lakehouses can be paired with query engines optimized for different analytical and operational workloads, including reporting, machine learning, generative AI, streaming, and automation. Different teams may access the same data lakehouse using query engines optimized for their workloads.

Query engines usually also include DataFrame APIs, enabling analysts, data scientists, and other users to access and query data using languages like Python, R, Scala, and others for the complex manipulations of data characteristic of machine learning and AI.

5. Consumption

Business intelligence platforms, data science applications, and AI architectures sit in the consumption layer, drawing transformed, analytics-ready data from the lake via the query engine or DataFrame API to produce reports, dashboards, and data products, like machine learning and artificial intelligence models, of all kinds.

Key features and advantages of a data lakehouse

By combining the capabilities of both data lakes and data warehouses, the data lakehouse offers the following key features:

- ACID transactions support: Data lakehouses enable ACID transactions, normally associated with data warehouses, to ensure consistency as multiple parties concurrently read and write data.

- BI support: With ACID support and SQL query engines, analysts can directly connect data lakehouses with business intelligence platforms.

- Open storage formats: Data lakehouses use open storage formats, which a data team can combine with their compute engine of choice. You’re not locked into one vendor’s monolithic data analytics architecture.

- Schema and governance capabilities: Data lakehouses support schema-on-read, in which the software accessing the data determines its structure on the fly. For structured data, they also support defined schemas and schema enforcement, which ensures that data written to a table matches its schema.

- Support for diverse data types and workloads: Data lakehouses can hold both structured and unstructured data, so aside from handling relational data, you can use them to store, transform, and analyze images, video, audio, and text, as well as semi-structured data like JSON files.

- Decoupled storage and compute: Storage resources are decoupled from compute resources. This provides the modularity to control costs and scale either one separately to meet the needs of your workloads, whether for AI, machine learning, business intelligence, or data science.
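The difference between schema enforcement and schema-on-read, described in the list above, can be sketched in a few lines of Python. This is a hypothetical illustration, not how any particular table format validates writes: `enforce_schema` rejects rows that don't match a declared schema before they are written, while `read_with_schema` leaves raw JSON untouched and lets the reader decide which fields to extract. The schema, names, and error type are all assumptions for the example.

```python
import json
from typing import Any, Dict, Iterable, List

# Hypothetical declared schema for a structured table: column -> Python type.
ORDERS_SCHEMA: Dict[str, type] = {"order_id": int, "customer_email": str, "amount": float}


class SchemaMismatchError(ValueError):
    """Raised when a row does not match the table's declared schema."""


def enforce_schema(row: Dict[str, Any], schema: Dict[str, type]) -> Dict[str, Any]:
    """Schema enforcement: validate a row before it is written to the table."""
    if set(row) != set(schema):
        raise SchemaMismatchError(f"columns {sorted(row)} do not match {sorted(schema)}")
    for col, expected in schema.items():
        if not isinstance(row[col], expected):
            raise SchemaMismatchError(
                f"{col}: expected {expected.__name__}, got {type(row[col]).__name__}"
            )
    return row


def read_with_schema(raw_lines: Iterable[str], fields: List[str]) -> List[Dict[str, Any]]:
    """Schema-on-read: raw JSON stays as stored; the reader projects the fields it wants."""
    records = (json.loads(line) for line in raw_lines)
    # Fields absent from a record come back as None instead of failing the read.
    return [{f: rec.get(f) for f in fields} for rec in records]


if __name__ == "__main__":
    table: List[Dict[str, Any]] = []
    good = {"order_id": 1, "customer_email": "a@example.com", "amount": 12.5}
    table.append(enforce_schema(good, ORDERS_SCHEMA))
    try:
        bad = {"order_id": "2", "customer_email": "b@example.com", "amount": 5.0}
        table.append(enforce_schema(bad, ORDERS_SCHEMA))
    except SchemaMismatchError as err:
        print("rejected:", err)
    print(read_with_schema(['{"id": 1, "tags": ["x"]}', '{"id": 2}'], ["id", "tags"]))
```

The enforcement check here is deliberately strict (an integer `amount` would be rejected for a `float` column); real engines typically apply their own type-promotion rules.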

These features offer data teams the following benefits and advantages:

- Scalability: Data lakehouses are underpinned by commodity cloud storage, allowing cheap storage of very high volumes.

- Improved data management: By storing diverse data, data lakehouses can support a broad range of use cases, from reporting to predictive modeling to generative AI.

- Streamlined data architecture: The data lakehouse simplifies data architecture by removing the need to use a data lake as a staging area for a data warehouse, as well as the need to maintain a separate data warehouse for analytics alongside a data lake for streaming operations.

- Lower costs: With fewer systems to run, integrate, and maintain, the streamlined architecture also lowers costs.

- Eliminate redundant data: Since data is unified in a lakehouse, redundant copies of data are no longer necessary. This reduces storage requirements and simplifies governance.

Data lakehouses have a bright future

Databricks first announced the technology underlying the data lakehouse in 2017, and it eventually became an open-source project (Delta Lake) aimed at bringing reliability to data lakes. In the years since, other major cloud platform providers have begun to offer similar architectures, and the lakehouse is rapidly becoming a common standard for data storage.

Data lakehouses are ideal for the following use cases:

- An organization’s data needs are anticipated to continue growing in scale, volume, and complexity, making the cost advantages and flexibility offered by using a data lake for storage more meaningful.

- An organization wants to simplify its data architecture and eliminate redundancies for cost control and better governance.

- An organization is pursuing advanced analytics like generative AI, which benefits from having all of an organization’s data, both structured and unstructured, in one place.

However, setting up a data lakehouse requires not only extracting and loading data into a data lake in an open table format, but also maintaining it, which involves tasks such as cleaning, deduplicating, compacting, partitioning, and clustering data. These tasks require considerable engineering overhead and specialized expertise.
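Compaction is a good example of this maintenance work: many small files, which are common with frequent or streaming loads, are rewritten into fewer, larger ones. The sketch below covers only the planning step, grouping small files into rewrite batches with a first-fit-decreasing bin-packing heuristic. The `DataFile` type, the target size, and the "half the target size" small-file threshold are assumptions for illustration, and the sketch does not cover partitioning or clustering.

```python
from typing import Iterable, List, NamedTuple, Optional


class DataFile(NamedTuple):
    path: str
    size_bytes: int


def plan_compaction(
    files: Iterable[DataFile],
    target_bytes: int,
    small_file_threshold: Optional[int] = None,
) -> List[List[DataFile]]:
    """Group small files into rewrite batches of at most target_bytes each.

    Uses first-fit decreasing: largest small files first, each placed into the
    first batch that still has room.
    """
    threshold = target_bytes // 2 if small_file_threshold is None else small_file_threshold
    small = sorted(
        (f for f in files if f.size_bytes < threshold),
        key=lambda f: f.size_bytes,
        reverse=True,
    )
    batches: List[List[DataFile]] = []
    totals: List[int] = []
    for f in small:
        for i, total in enumerate(totals):
            if total + f.size_bytes <= target_bytes:
                batches[i].append(f)
                totals[i] += f.size_bytes
                break
        else:
            # No existing batch has room: start a new one.
            batches.append([f])
            totals.append(f.size_bytes)
    # A batch holding a single file would just rewrite it unchanged, so skip those.
    return [b for b in batches if len(b) > 1]


if __name__ == "__main__":
    MB = 1024 * 1024
    files = [DataFile(f"part-{i}.parquet", s * MB) for i, s in enumerate([40, 40, 40, 30, 90])]
    for batch in plan_compaction(files, target_bytes=100 * MB):
        print([f.path for f in batch], sum(f.size_bytes for f in batch) // MB, "MB")
```

The 90 MB file is already close to the target size, so it is left alone; the four smaller files become two rewrite batches.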

The Fivetran Managed Data Lake Service automates these activities, making data integration and data movement into the data lakehouse simple and reliable and freeing up your valuable engineering time. Sign up today for a free trial.