The enterprise AI boom is here. Companies everywhere are piloting LLM copilots, experimenting with agentic workflows, and racing to infuse intelligence into every corner of the business. From the outside, it looks like a transformation. But under the hood, most of these efforts are built on a shaky foundation: legacy pipelines, brittle integrations, and data that’s inconsistent, incomplete, or out of date.

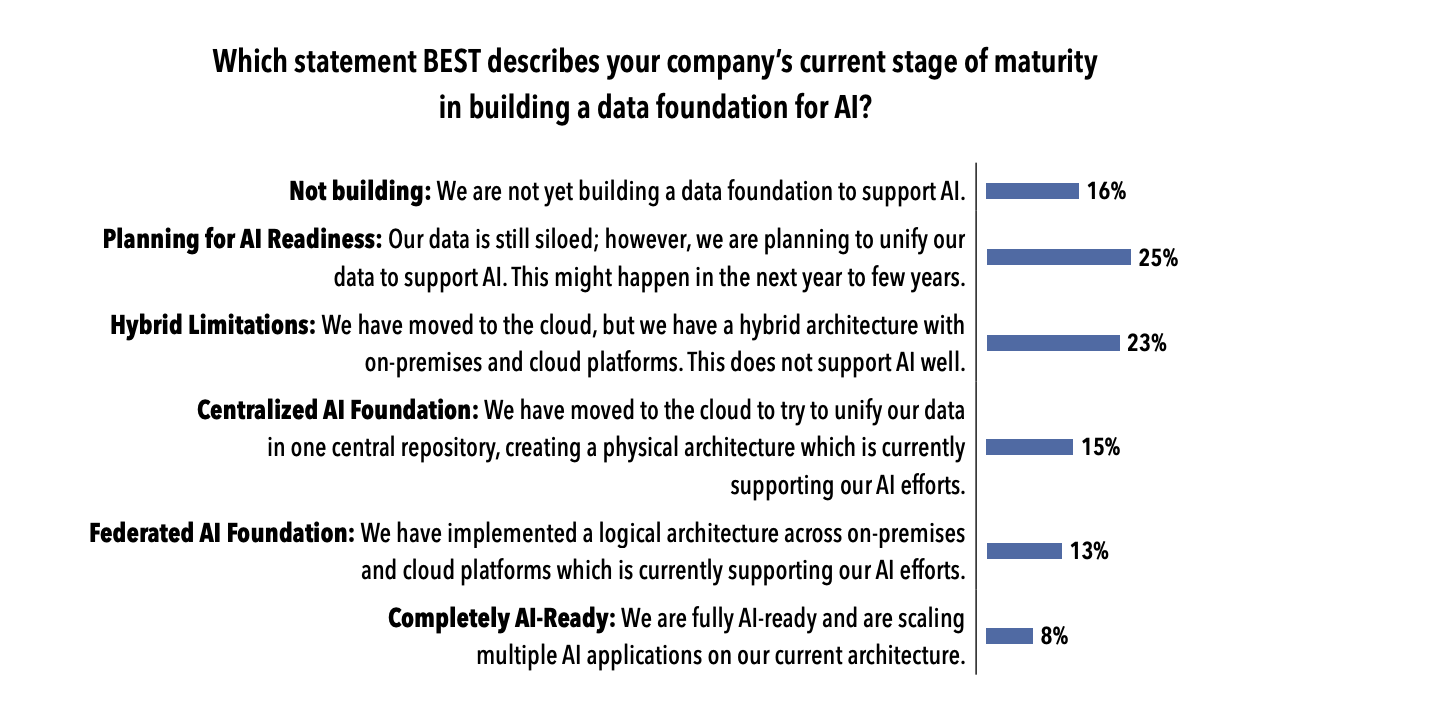

According to the latest TDWI Data Points Report: The data foundation for AI, only 7.6% of organizations are truly AI-ready, with a data architecture capable of scaling multiple AI applications. The rest are struggling to centralize, observe, and govern their data, with engineering teams consumed by building and maintaining infrastructure rather than creating new data products and new value.

And yet, the pressure to implement AI initiatives hasn’t let up. Over 80% of companies are already using or experimenting with AI. But only 15% are combining generative AI with their internal data, which is where the real long-term value lies. Instead, many are experimenting with off-the-shelf models and consumer-grade tools, often operating outside of IT’s purview. This creates “shadow AI”: projects that aren’t scalable, governed, or production-ready. The upshot of using AI without supporting infrastructure or proprietary data is bad AI — hallucinating models, misleading outputs, and eroding user trust.

The infrastructure barrier to scalable AI

“AI readiness” is predicated on the ability to deploy and scale multiple AI applications that leverage an organization’s proprietary data. Beyond proof-of-concept models, it means a scalable, governed data architecture with real-time access, resilient pipelines, and support for both structured and unstructured data. It means pipelines that adapt to schema changes and support for AI workloads for every team, not just one department.

The key obstacle to AI readiness stems from engineering-intensive data integration activities that should be automated. According to TDWI, more than two-thirds of organizations say their engineers spend over 25% of their time maintaining ETL/ELT pipelines. That’s a huge drain on productivity and a serious bottleneck for innovation.

Instead of building AI products, technical talent is stuck babysitting brittle pipelines. Add to that widespread issues with data standardization (32%), quality (31%), and hybrid cloud integration (27%), and it’s no wonder AI initiatives often stall out or stay stuck in isolated pilot mode.

How to achieve AI readiness

AI readiness isn’t just about adopting the latest models — it’s about creating the right data foundation to support them at scale. To be truly AI-ready, organizations must move beyond reactive, manual processes and build a data architecture that is governed, automated, and built for real-time intelligence.

Here’s what that looks like in practice:

- Automate data movement from every corner of your business — SaaS apps, legacy databases, and on-prem systems — using reliable, fully managed pipelines.

- Standardize and clean your data at the source to ensure accuracy, consistency, and trust, so your AI models don’t train on bad inputs.

- Unify structured and unstructured data into a single analytics platform that supports advanced AI workloads across teams, not just isolated departments.

- Design for agility and scale, with pipelines that adapt automatically to schema changes and infrastructure that supports real-time replication.

- Eliminate pipeline maintenance burdens so your engineers can focus on high-impact initiatives like building AI-powered features and data products.

At Fivetran, we’re building that foundation. With over 700 prebuilt connectors, automated schema handling, and high-performance replication into Microsoft Fabric, OneLake, Synapse, and more, we help companies eliminate the grunt work of data movement so they can focus on what really matters: building smarter, faster, AI-enabled businesses.

[CTA_MODULE]