Top 16 data integration tools and what you need to know

Modern businesses generate constant streams of data from hundreds of sources, such as SaaS applications, IoT devices, streaming platforms, and legacy systems. Integrating this data into cloud data warehouses and data lakes is crucial for centralized, real-time analytics.

This article will help you choose the right tool for your business. We examine 16 leading data integration solutions across 5 key categories using modern selection criteria and considering emerging trends.

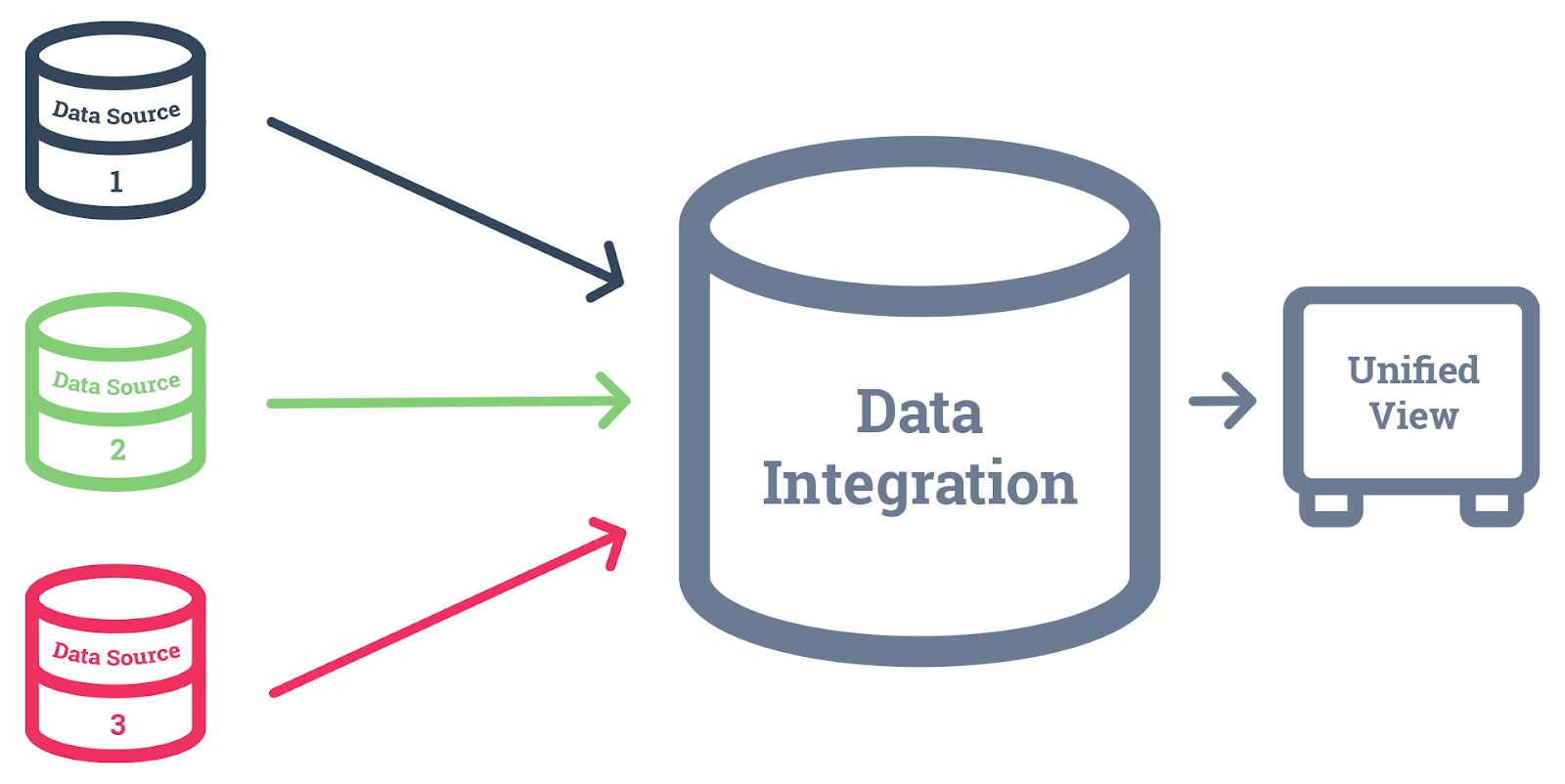

What are data integration tools?

Data integration tools are software applications that combine data from diverse sources into a single, centralized location. The tools manage the process of ingesting, cleansing, transforming, and moving data to a destination where it can be analyzed, like a data warehouse.

However, effective integration means overcoming several challenges: maintaining data quality, managing large data volumes, keeping data secure, and scaling as needs grow. Most modern solutions address these issues with features and capabilities for:

- Data cleansing and governance: To ensure data is accurate, secure, and reliable.

- Master data management: To create a single source of truth through standardized definitions.

- Data ingestion and connectors: To gather, import, and move data between systems (e.g., via ETL).

- Data catalogs and migration: To find, inventory, and move data assets across the organization.
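The ingest, cleanse, transform, and load steps described above can be sketched in a few lines of standard-library Python. This is a minimal illustration, not any vendor's implementation: the CSV content, column names, and the in-memory SQLite database standing in for a warehouse are all assumptions.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (note the messy rows).
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,Bob,
3,carol,5.50
3,carol,5.50
"""

def extract(text):
    """Ingest: parse raw CSV rows into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))

def cleanse(rows):
    """Cleanse: trim whitespace, drop rows missing an amount, and de-duplicate on order_id."""
    seen = set()
    clean = []
    for row in rows:
        row = {k: (v or "").strip() for k, v in row.items()}
        if not row["amount"] or row["order_id"] in seen:
            continue  # drop incomplete or duplicate records
        seen.add(row["order_id"])
        clean.append(row)
    return clean

def transform(rows):
    """Transform: cast types, normalize names, and store amounts as integer cents."""
    return [
        (int(r["order_id"]), r["customer"].title(), round(float(r["amount"]) * 100))
        for r in rows
    ]

def load(records, conn):
    """Load: write the prepared records to the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount_cents INTEGER)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(cleanse(extract(RAW_CSV))), conn)
print(conn.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
# [(1, 'Alice', 1999), (3, 'Carol', 550)]
```

Real tools add connectors, scheduling, monitoring, and error handling around these same four stages.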

Top 16 data integration tools

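The right data integration tool depends on your specific business needs, your current infrastructure, and the expertise of your team. To simplify the selection process, the solutions below are split into the following categories:

- Cloud-native integration tools

- Enterprise-grade integration platforms

- Developer-focused tools

- Integration Platform as a Service (iPaaS)

- Analytics-focused platforms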

Cloud-native integration tools

Cloud-native integration tools are favored for their speed, simplicity, and extensive connector libraries. The options in this category are:

Airbyte

Airbyte is an open-source data movement platform designed for ELT workflows. It is highly adaptable to diverse data sources and can be extended when a source isn't supported out of the box.

A key feature is the Connector Development Kit (CDK), which allows developers to build custom connectors for unsupported sources. The platform is adapting to modern data needs, with features supporting AI use cases through integrations with vector databases and the ability to handle unstructured data.
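Airbyte connectors commonly support incremental syncs, where the connector remembers a cursor (such as an `updated_at` timestamp) and fetches only newer records on the next run. The sketch below illustrates that idea in plain Python. It does not use the Airbyte CDK's actual classes; the `fetch_since` source call, the record shape, and the state format are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical source data: a real connector would call an API instead.
SOURCE: List[Dict] = [
    {"id": 1, "updated_at": "2024-01-01T00:00:00Z"},
    {"id": 2, "updated_at": "2024-01-02T00:00:00Z"},
    {"id": 3, "updated_at": "2024-01-03T00:00:00Z"},
]

def fetch_since(cursor: str) -> List[Dict]:
    """Hypothetical API call: return records updated strictly after `cursor`."""
    # ISO-8601 timestamps in one fixed format compare correctly as strings.
    return [r for r in SOURCE if r["updated_at"] > cursor]

def read_incremental(state: Dict[str, str]) -> Tuple[List[Dict], Dict[str, str]]:
    """Read only records newer than the saved cursor and return the updated state."""
    cursor = state.get("updated_at", "")  # empty cursor means a full first sync
    records = sorted(fetch_since(cursor), key=lambda r: r["updated_at"])
    if records:
        cursor = records[-1]["updated_at"]
    return records, {"updated_at": cursor}

records, state = read_incremental({})   # first run: full sync
print(len(records), state)             # 3 {'updated_at': '2024-01-03T00:00:00Z'}
SOURCE.append({"id": 4, "updated_at": "2024-01-04T00:00:00Z"})
records, state = read_incremental(state)  # next run: only the new record
print([r["id"] for r in records])       # [4]
```

Persisting the returned state between runs is what lets a sync resume where it left off instead of re-reading the whole source.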

Noteworthy features:

- Open-source flexibility: Airbyte's open-source nature provides flexibility and cost-effectiveness for self-hosted deployments.

- Connector quality: Connector quality can vary, requiring thorough testing for critical pipelines.

- Community support: For organizations with internal expertise seeking full control, Airbyte offers a community-driven alternative.

- Use case: You need open-source connectors to move data between cloud tools and cannot justify a paid platform yet.

Pricing: Free for self-hosted, consumption-based for cloud, and capacity-based for enterprise plans.

Fivetran

Fivetran is a fully managed, cloud-native data integration platform that automates the ELT process. It’s known for its reliability, ease of use, and extensive library of 700+ pre-built connectors.

The automated schema drift handling feature adapts to changes in source schemas and minimizes manual intervention. The platform is low-code and offers a native dbt Core integration, allowing teams to orchestrate data transformation jobs within the Fivetran environment.
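At its core, schema drift handling means comparing incoming records against the destination schema and evolving the destination when new fields appear. The following is a minimal sketch of that idea using SQLite as a stand-in destination. It is not how Fivetran implements the feature; the table name and the type mapping are assumptions.

```python
import sqlite3
from typing import Dict, List

# Simplified (hypothetical) mapping from Python types to SQLite column types.
TYPE_MAP = {int: "INTEGER", float: "REAL", str: "TEXT"}

def existing_columns(conn: sqlite3.Connection, table: str) -> List[str]:
    # PRAGMA table_info returns one row per column; index 1 holds the column name.
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]

def load_with_drift(conn: sqlite3.Connection, table: str, records: List[Dict]) -> List[str]:
    """Add any columns missing from `table`, insert the records, and return the added columns.

    Table and column names are trusted in this sketch; never interpolate untrusted identifiers.
    """
    added = []
    for record in records:
        for col, value in record.items():
            if col not in existing_columns(conn, table):
                col_type = TYPE_MAP.get(type(value), "TEXT")
                conn.execute(f"ALTER TABLE {table} ADD COLUMN {col} {col_type}")
                added.append(col)
        cols = ", ".join(record)
        placeholders = ", ".join("?" for _ in record)
        conn.execute(f"INSERT INTO {table} ({cols}) VALUES ({placeholders})", list(record.values()))
    conn.commit()
    return added

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER, email TEXT)")
added = load_with_drift(conn, "users", [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": "b@example.com", "plan": "pro"},  # a new field appears at the source
])
print(added)                            # ['plan']
print(existing_columns(conn, "users"))  # ['id', 'email', 'plan']
```

Earlier rows simply get `NULL` in the new column, so the load keeps running instead of failing on the unexpected field.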

Noteworthy features:

- Low maintenance: Fivetran’s primary value is reducing engineering and maintenance overhead. Because schema changes and API shifts are handled automatically, it’s a strong solution for teams prioritizing reliability and automation over granular control.

- Pricing predictability: Its consumption-based pricing, using ‘monthly active rows’ (MARs), can become unpredictable and expensive with high data volumes.

- Transformation capabilities: While Fivetran is effective for data extraction and loading, its transformation capabilities are limited.

- Use case: You want software as a service (SaaS) data in your warehouse without building or maintaining pipelines.

Pricing: Consumption-based (MARs), with free and paid plans scaling with usage.
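To see why MAR-based costs track the number of distinct rows that change, rather than the number of changes, here is a small counting sketch. It assumes a simplified definition of MAR: the number of distinct rows, identified by table and primary key, that are inserted, updated, or deleted in a calendar month. Fivetran's documentation is the authority on the exact billing rules, and the change events below are made up.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each change event: (month "YYYY-MM", table name, primary key). Hypothetical sample data.
ChangeEvent = Tuple[str, str, int]

def monthly_active_rows(events: Iterable[ChangeEvent]) -> Dict[str, int]:
    """Count distinct (table, primary key) pairs changed in each month."""
    active = defaultdict(set)
    for month, table, pk in events:
        active[month].add((table, pk))
    return {month: len(rows) for month, rows in sorted(active.items())}

events = [
    ("2024-05", "orders", 1),
    ("2024-05", "orders", 1),     # same row updated again: still one active row
    ("2024-05", "orders", 2),
    ("2024-05", "customers", 1),  # same key in a different table: counted separately
    ("2024-06", "orders", 1),     # counted again in a new month
]
print(monthly_active_rows(events))  # {'2024-05': 3, '2024-06': 1}
```

Under this simplified definition, repeated updates to the same rows are cheap, while large backfills or workloads that touch many distinct rows each month push the MAR count (and cost) up.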

Matillion

Matillion is a data integration platform designed explicitly for cloud data environments. It focuses on the "T" (Transform) in both ELT and extract, transform, load (ETL). It offers a visual, low-code interface to build complex data transformation jobs that execute directly within a user’s cloud data warehouse.

This approach utilizes the native processing power of the underlying platform, such as Snowflake, Databricks, Redshift, or BigQuery. Matillion also offers features for data orchestration and version control.
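The pushdown idea is that raw data is loaded first and the transformation is expressed as SQL that the warehouse engine itself executes, instead of pulling data out into a separate processing server. The sketch below uses SQLite as a stand-in for a cloud warehouse, and the table names are hypothetical. In a tool like Matillion the transformation is built visually rather than hand-written, but the principle of running it inside the warehouse is the same.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for Snowflake, BigQuery, Redshift, etc.

# 1. Extract + Load: land raw rows untouched in a staging table.
conn.execute("CREATE TABLE raw_events (user_id INTEGER, event TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO raw_events VALUES (?, ?, ?)",
    [(1, "purchase", 20.0), (1, "purchase", 5.0), (2, "refund", -5.0), (2, "purchase", 12.5)],
)

# 2. Transform: push the logic down as SQL so the engine does the work in place.
conn.execute("""
    CREATE TABLE user_revenue AS
    SELECT user_id,
           SUM(CASE WHEN event = 'purchase' THEN amount ELSE 0 END) AS gross,
           SUM(amount) AS net
    FROM raw_events
    GROUP BY user_id
""")

print(conn.execute("SELECT * FROM user_revenue ORDER BY user_id").fetchall())
# [(1, 25.0, 25.0), (2, 12.5, 7.5)]
```

Because the data never leaves the engine, the transformation scales with the warehouse's compute, which is also why compute-based pricing applies to these jobs.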

Noteworthy features:

- Deep cloud data warehouse integration: Matillion is designed for deep integration with central cloud data warehouses, leading to high-performance transformations.

- Visual interface: Its visual interface makes it accessible to a broader user base.

- Source connector library: Its source connector library is smaller than those of dedicated ELT platforms.

- Cost model: The compute-driven cost model, based on ‘Matillion Credits,’ can be expensive for frequent jobs.

- Use case: You need to transform data inside Snowflake or Redshift and prefer a visual user interface (UI) over writing code.

Pricing: Usage-based, consuming 'Matillion Credits' based on compute (vCore) usage over time, with various tiers available.

Enterprise-grade integration platforms

These tools offer comprehensive, highly scalable solutions designed for large, complex organizations. They provide robust governance and security, and support a vast range of data sources.

IBM DataStage

IBM DataStage is an enterprise-grade data integration tool designed for complex extract, transform, load (ETL) and ELT jobs. Its core strength is a high-performance parallel processing engine that handles large data volumes efficiently.

It’s offered as a service within IBM Cloud Pak for Data, a unified data and artificial intelligence (AI) platform that supports deployment across cloud and on-premises environments. DataStage is particularly well suited to complex transformations involving legacy enterprise sources such as mainframes.
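Parallel engines like DataStage's gain much of their throughput from partitioned parallelism: records are split into partitions (for example, by hashing a key) so each partition can be processed independently and the results combined. The sketch below illustrates the technique with Python's `concurrent.futures`. It is not DataStage's engine, and the partition count, key, and aggregation are assumptions.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

Record = Tuple[str, int]  # (customer_id, amount), hypothetical shape

def hash_partition(records: List[Record], n: int) -> List[List[Record]]:
    """Route each record to a partition by hashing its key, so equal keys land together."""
    parts: List[List[Record]] = [[] for _ in range(n)]
    for key, amount in records:
        parts[hash(key) % n].append((key, amount))
    return parts

def aggregate(partition: List[Record]) -> Dict[str, int]:
    """Per-partition work: total amount by key."""
    totals: Counter = Counter()
    for key, amount in partition:
        totals[key] += amount
    return dict(totals)

def parallel_totals(records: List[Record], n: int = 4) -> Dict[str, int]:
    # Threads keep the sketch portable; a real engine spreads partitions across processes or nodes.
    with ThreadPoolExecutor(max_workers=n) as pool:
        results = pool.map(aggregate, hash_partition(records, n))
    merged: Dict[str, int] = {}
    for partial in results:
        merged.update(partial)  # safe: hash partitioning guarantees partitions share no keys
    return merged

records = [("a", 10), ("b", 5), ("a", 7), ("c", 1), ("b", 2)]
print(dict(sorted(parallel_totals(records).items())))  # {'a': 17, 'b': 7, 'c': 1}
```

The key design choice is partitioning on the grouping key: it lets each worker finish its aggregation without coordinating with the others.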

Noteworthy features:

- Complexity and resource intensity: While a workhorse for large-scale data movement, DataStage requires significant technical expertise for initial setup and ongoing maintenance.

- Legacy system integration: Its strength in connecting to legacy systems is a major advantage for established enterprises with diverse data ecosystems.

- User interface: Compared to newer, more agile cloud-native solutions, its user interface and development experience can feel dated.

- Cost: The licensing model, based on Virtual Processor Cores (VPCs), can be a significant investment, making it more suitable for large enterprises with substantial budgets.

- Reliability versus debugging: Users highlight its reliability and ability to handle mission-critical workloads, but also point to challenges in debugging and managing parameters in complex job deployments.

- Use case: You are managing legacy systems and need stable, governed batch ETL at scale.

Pricing: Primarily based on VPCs per month within Cloud Pak for Data, with software as a service (SaaS) and custom enterprise options available.

Informatica

Informatica provides an enterprise-grade data management platform known as the Intelligent Data Management Cloud (IDMC). It offers a tightly integrated set of tools covering the entire data lifecycle. This includes data integration (ETL, ELT), application integration (integration platform as a service, or iPaaS), data quality, data governance, and master data management (MDM). The IDMC is powered by an AI engine called CLAIRE, which automates tasks including:

- Discovery

- Mapping

- Cleansing

The platform can also handle high-volume, mission-critical workloads across on-premises, cloud, and hybrid infrastructures.

Noteworthy features:

- Comprehensive suite: Informatica’s main strength is its all-in-one approach to data management, making it a good choice for organizations with diverse data needs that prefer a single vendor solution.

- Scalability and reliability: The platform’s enterprise-grade scalability and reliability are consistently praised by users.

- Setup and implementation: Advanced features come with a learning curve.

- Automation versus manual effort: While the CLAIRE AI engine aims to automate tasks, users often report that initial setup and configuration require considerable manual effort and specialized skills.

- Use case: You need deep metadata tracking, data governance, and compliance across complex environments.

Pricing: Consumption-based, primarily using “Informatica Processing Units” (IPUs), with pay-as-you-go, subscription tiers, and custom enterprise options available.

Oracle Data Integrator

Oracle Data Integrator (ODI) is an ELT-focused data integration tool, now primarily offered as part of the Oracle Cloud Infrastructure (OCI) Data Integration service. While the on-premises version of ODI still exists, Oracle’s strategic focus and new feature development are centered on its cloud offering.

ODI’s ELT architecture pushes transformations down to the underlying source or target database, thereby utilizing the database's native processing power. A key distinguishing feature of ODI has always been its “Knowledge Modules” (KMs), which are reusable code templates that make the platform highly extensible and adaptable to various technologies and integration patterns.
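Knowledge Modules are easiest to picture as parameterized code templates: the same integration logic (here, an incremental upsert) is written once and rendered into SQL for whichever tables a mapping uses. The Python sketch below shows that general idea with `string.Template`. The template text, placeholder names, and table names are hypothetical and are not ODI's actual KM syntax.

```python
from string import Template
from typing import List

# Hypothetical "knowledge module": reusable upsert logic with placeholders, written once.
INCREMENTAL_MERGE_KM = Template(
    "MERGE INTO $target t\n"
    "USING $staging s ON (t.$key = s.$key)\n"
    "WHEN MATCHED THEN UPDATE SET $updates\n"
    "WHEN NOT MATCHED THEN INSERT ($columns) VALUES ($values)"
)

def render_km(target: str, staging: str, key: str, columns: List[str]) -> str:
    """Fill the template for one specific mapping (the key column is never updated)."""
    non_key = [c for c in columns if c != key]
    return INCREMENTAL_MERGE_KM.substitute(
        target=target,
        staging=staging,
        key=key,
        updates=", ".join(f"t.{c} = s.{c}" for c in non_key),
        columns=", ".join(columns),
        values=", ".join(f"s.{c}" for c in columns),
    )

print(render_km("dw.customers", "stg.customers", "customer_id", ["customer_id", "name", "email"]))
```

Swapping in a different template, for example one that emits a plain `INSERT` for full reloads, changes the loading strategy without touching the mapping itself, which is the kind of reuse KMs provide.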

Noteworthy features:

- Oracle ecosystem optimization: ODI’s primary advantage lies in its deep optimization for the Oracle ecosystem.

- Extensibility: Reusable Knowledge Modules let teams adapt the platform to a wide range of technologies and integration patterns.

- Learning curve: ODI can be difficult to master, particularly for those unfamiliar with Oracle’s specific methodologies and the concept of KMs.

- Connectivity: While strong within the Oracle ecosystem, connectivity to non-Oracle sources can require more configuration compared to other enterprise-grade tools.

- Cost: The cost, encompassing both licensing for on-premises versions and consumption-based pricing for OCI, can be substantial, aligning with its enterprise-grade capabilities.

Pricing: For on-premises (Legacy ODI), pricing is licensed per processor core. The OCI Data Integration Service is pay-as-you-go based on “Workspace hours” and “Data Processor hours,” with a free tier available.

Developer-focused tools

These platforms enable data engineers and developers to build, test, and deploy custom data pipelines with code. They offer flexibility, version control, and deep integration with software development workflows.

dbt Cloud

dbt (data build tool) is a SQL-centric data transformation framework that applies software engineering principles to data modeling within cloud data warehouses. It helps build reliable, tested data models using SQL SELECT statements.

While dbt Cloud offers a managed service with an integrated development environment (IDE) and scheduling, dbt is exclusively a transformation tool. This means you’ll need external solutions for data extraction and loading.

Its SQL-based approach can be limiting for complex procedural logic, and the native scheduler may lack advanced orchestration capabilities.
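In dbt, a model is a SELECT statement that dbt materializes as a table or view, and tests are assertions (such as `unique` and `not_null`) run against the result. The Python sketch below mimics that workflow with SQLite to make the idea concrete. It does not use dbt itself, where models live in `.sql` files, reference each other with `{{ ref('...') }}`, and declare tests in YAML. The model name, helper functions, and data are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the cloud data warehouse
conn.execute("CREATE TABLE raw_customers (id INTEGER, email TEXT)")
conn.executemany("INSERT INTO raw_customers VALUES (?, ?)",
                 [(1, "A@Example.com"), (2, "b@example.com"), (3, None)])

# A "model" is just a SELECT statement; here it is materialized as a table.
MODEL_SQL = "SELECT id, LOWER(email) AS email FROM raw_customers WHERE email IS NOT NULL"

def materialize(name: str, select_sql: str) -> None:
    """Rebuild the model table from its SELECT (a simplified 'table' materialization)."""
    conn.execute(f"DROP TABLE IF EXISTS {name}")
    conn.execute(f"CREATE TABLE {name} AS {select_sql}")

def check_not_null(model: str, column: str) -> bool:
    """Passes when no row has a NULL in `column`."""
    (failures,) = conn.execute(f"SELECT COUNT(*) FROM {model} WHERE {column} IS NULL").fetchone()
    return failures == 0

def check_unique(model: str, column: str) -> bool:
    """Passes when no value of `column` appears more than once."""
    dupes = conn.execute(
        f"SELECT {column} FROM {model} GROUP BY {column} HAVING COUNT(*) > 1"
    ).fetchall()
    return not dupes

materialize("stg_customers", MODEL_SQL)
print(check_not_null("stg_customers", "email"), check_unique("stg_customers", "id"))  # True True
```

Running checks like these after every build is what turns a folder of SQL queries into tested, reproducible data models.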

Noteworthy features:

- Software engineering practices: Brings version control, testing, and documentation to SQL transformation code.

- Transformation only: Requires separate tools for data extraction and loading.

- SQL-centric: Accessible to anyone who knows SQL, but complex procedural logic can be awkward to express.

- Orchestration: The native scheduler may need to be supplemented by a dedicated orchestrator for complex workflows.

- Use case: You want tested, version-controlled SQL transformations inside your cloud data warehouse.

Pricing: dbt Core is open-source and free to use. dbt Cloud offers a free developer tier alongside paid tiers for teams and enterprises.

Meltano

Meltano is an open-source DataOps platform for building, orchestrating, and managing data pipelines. It acts as an integration and orchestration layer, wrapping tools like Singer for EL and dbt for T.

This enables defining ELT pipelines within version-controlled project files, fostering collaboration and reproducibility. Its command-line interface (CLI)-first design appeals to developers. While useful for custom pipelines, it may not be optimal for simpler, out-of-the-box integration needs.
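Singer, which Meltano wraps for extraction and loading, connects a "tap" (source) to a "target" (destination) through JSON messages written one per line, chiefly `SCHEMA`, `RECORD`, and `STATE` messages. The sketch below imitates that contract in a single Python process. The stream name, fields, and in-memory target are hypothetical, and real taps and targets run as separate programs connected by a pipe.

```python
import io
import json
from typing import Dict, List, TextIO, Tuple

def tap(out: TextIO, since_id: int = 0) -> None:
    """Hypothetical tap: emit Singer-style messages as JSON lines."""
    rows = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Linus"}]
    out.write(json.dumps({"type": "SCHEMA", "stream": "users",
                          "schema": {"properties": {"id": {"type": "integer"},
                                                    "name": {"type": "string"}}},
                          "key_properties": ["id"]}) + "\n")
    last_id = since_id
    for row in rows:
        if row["id"] > since_id:  # incremental: skip rows already synced
            out.write(json.dumps({"type": "RECORD", "stream": "users", "record": row}) + "\n")
            last_id = row["id"]
    # STATE lets the next run resume from where this one stopped.
    out.write(json.dumps({"type": "STATE", "value": {"users": {"last_id": last_id}}}) + "\n")

def target(inp: TextIO) -> Tuple[Dict[str, List[dict]], dict]:
    """Hypothetical target: collect records per stream and keep the latest state."""
    tables: Dict[str, List[dict]] = {}
    state: dict = {}
    for line in inp:
        msg = json.loads(line)
        if msg["type"] == "SCHEMA":
            tables.setdefault(msg["stream"], [])
        elif msg["type"] == "RECORD":
            tables[msg["stream"]].append(msg["record"])
        elif msg["type"] == "STATE":
            state = msg["value"]
    return tables, state

pipe = io.StringIO()  # stands in for the OS pipe in `tap | target`
tap(pipe)
pipe.seek(0)
tables, state = target(pipe)
print(tables["users"], state)
```

Because taps and targets only agree on this message format, any tap can be paired with any target, which is what lets Meltano mix and match them in a project file.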

Noteworthy features:

- DataOps-as-code: Offers complete control and visibility over pipelines, promoting reproducibility and avoiding vendor lock-in.

- High technical barrier: Requires strong engineering skills for effective setup and management.

- Connector quality: Quality and maturity of connectors can vary within the Singer ecosystem.

- Community-driven support: Primarily relies on community support, which may not suit all organizations.

- Use case: You want open-source ELT pipelines with Git-based workflows and full control.

Pricing: Open-source and free to use. Paid tiers for Meltano Cloud offer managed services and additional features.

Prefect

Prefect is a workflow orchestration tool for building and monitoring data pipelines defined as Python code. It is well suited to complex, dynamic workflows and a range of data engineering tasks, including machine learning pipelines.

The Python-native approach is a significant advantage for developers already in that ecosystem. Prefect offers both an open-source framework and a managed cloud service, providing deployment flexibility.

Noteworthy features:

- Code-centric orchestration: Defines workflows as Python code, offering flexibility and control for complex data pipelines.

- Resilience: Built-in retries, caching, and logging help keep pipelines robust.

- Orchestration-only: Primarily an orchestration tool; requires integration with other tools for data extraction, loading, and transformation.

- Self-hosted complexity: Setting up and managing a self-hosted Prefect server can be complex.

- Learning curve: Steep learning curve for those new to workflow orchestration or Python-based data engineering.

Pricing: Open-source Prefect Core is free. Prefect Cloud offers a free tier and paid plans based on usage (e.g., number of runs, tasks, and users).
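Retries are one of the resilience features that make orchestrators like Prefect useful: a task that fails on a transient error is re-run a set number of times before the run is marked as failed. The standard-library sketch below shows the mechanism with a hypothetical `retry` decorator and a deliberately flaky task. In Prefect itself you would not write this by hand; retries are configured on the `@task` decorator, for example with its `retries` and `retry_delay_seconds` parameters.

```python
import functools
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def retry(retries: int = 3, delay_seconds: float = 0.0) -> Callable:
    """Hypothetical decorator: re-run the wrapped function up to `retries` extra times."""
    def decorator(fn: Callable[..., T]) -> Callable[..., T]:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs) -> T:
            for attempt in range(retries + 1):
                try:
                    return fn(*args, **kwargs)
                except Exception as exc:  # an orchestrator would also record this in its logs
                    if attempt == retries:
                        raise  # out of retries: surface the failure
                    print(f"attempt {attempt + 1} failed: {exc}; retrying")
                    time.sleep(delay_seconds)
        return wrapper
    return decorator

calls = {"n": 0}

@retry(retries=2)
def flaky_extract() -> str:
    """Hypothetical task that times out twice before succeeding."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source API timed out")
    return "rows extracted"

print(flaky_extract(), calls["n"])  # rows extracted 3
```

Retries suit transient failures such as timeouts or rate limits; persistent errors still fail the run once the retry budget is spent.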

Integration Platform as a Service (iPaaS)

iPaaS solutions go beyond data integration to connect applications and automate workflows across an entire organization. They typically feature low-code/no-code interfaces for building complex integrations quickly.

Boomi

Boomi is a comprehensive, cloud-native Integration Platform as a Service (iPaaS) providing a unified platform for application integration, data integration, Electronic Data Interchange (EDI), Application Programming Interface (API) management, and workflow automation. It features a low-code, visual interface to connect Software as a Service (SaaS) applications, on-premises systems, APIs, and EDI networks.

Its architecture supports hybrid environments, allowing for smooth connectivity between cloud and on-premises systems. Boomi is recognized for its scalability and ability to handle a wide range of integration patterns — everything from simple data synchronization to complex enterprise application integration.

Noteworthy features:

- Unified platform: Offers a comprehensive suite of integration capabilities within a single, cloud-native platform.

- Low-code/no-code: Accelerates development with an intuitive visual interface and pre-built connectors.

- Hybrid environment support: Seamlessly connects cloud and on-premises systems.

- Scalability: Designed to handle diverse integration patterns and high volumes of data.

- Learning curve: While low-code, mastering its full capabilities and managing complex configurations can be complicated.

- Cost: Pricing can be substantial, especially for large-scale enterprise deployments with extensive usage.

- Monitoring and debugging: Some users report challenges with monitoring and debugging complex integrations, requiring a deeper understanding of the platform.

- Use case: You need to connect cloud and on-prem systems quickly and don’t want to build custom APIs.

Pricing: Typically subscription-based, with pricing varying based on connectors, data volume, and features. Contact sales for detailed quotes.

Jitterbit

Jitterbit simplifies and accelerates the connection of applications, data, and APIs. Its Harmony platform handles many integration scenarios, including application-to-application, data integration, and full API lifecycle management. It emphasizes speed-to-deployment through an extensive library of pre-built "recipes" and process templates for common use cases. It also incorporates AI-based features for tasks like data mapping.

Noteworthy features:

- Rapid implementation: Pre-built recipes and process templates shorten time to deployment.

- Unified API/integration platform: Provides a single environment for API and integration management.

- User-friendly interface: A low-code interface makes it accessible to less technical users.

- Flexible deployment options: Supports various deployment scenarios.

- High-volume transformation limitations: May not be the best fit for very large data volumes.

- Steep learning curve: Advanced features take time to master.

- Considerable investment: Licensing can represent a significant cost.

- Use case: You’re manually moving data between systems and need automation without heavy dev work.

Pricing: Tier-based and quote-driven (Standard, Professional, Enterprise). A 30-day free trial is available.

SnapLogic

SnapLogic provides a unified solution for application integration, data integration, and API management. Its core components are "Snaps," pre-built, modular connectors for applications, data sources, and transformations. Users build pipelines by visually dragging and dropping these Snaps. The platform is known for its Artificial Intelligence (AI)-powered assistant, Iris, which offers suggestions for pipeline development. SnapLogic focuses on enabling self-service while maintaining centralized governance and security.
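The Snap model is essentially composition: each Snap does one job (read, map, filter, write), and a pipeline is an ordered chain of them, with each Snap's output feeding the next. The generator-based Python sketch below illustrates that composition pattern. The step names and data are hypothetical, and this is not SnapLogic's API.

```python
from functools import reduce
from typing import Callable, Dict, Iterable, Iterator, List

Doc = Dict[str, object]
Step = Callable[[Iterable[Doc]], Iterator[Doc]]

def read_source(_: Iterable[Doc]) -> Iterator[Doc]:
    """Hypothetical reader step: ignores its input and yields documents from a source."""
    yield from [{"name": "ada", "country": "UK"}, {"name": "grace", "country": "US"}]

def mapper(docs: Iterable[Doc]) -> Iterator[Doc]:
    """Mapping step: normalize the name field."""
    for d in docs:
        yield {**d, "name": str(d["name"]).title()}

def only_us(docs: Iterable[Doc]) -> Iterator[Doc]:
    """Filter step: keep US records only."""
    return (d for d in docs if d["country"] == "US")

def pipeline(*steps: Step) -> Callable[[], List[Doc]]:
    """Chain steps left to right, like connecting modular components on a canvas."""
    def run() -> List[Doc]:
        return list(reduce(lambda stream, step: step(stream), steps, iter(())))
    return run

run = pipeline(read_source, mapper, only_us)
print(run())  # [{'name': 'Grace', 'country': 'US'}]
```

Since each step only depends on the shape of the documents it receives, steps can be reordered, reused, or swapped without rewriting the rest of the pipeline.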

Noteworthy features:

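- Ease of use: Supports self-service pipeline building for users with varied technical backgrounds.

- Broad connectivity: An extensive library of Snaps covers many applications and data sources.

- Unified platform: Handles application integration, data integration, and API management in one place.

- AI-assisted development: Iris provides suggestions for pipeline development.

- Significant cost: Licensing can be expensive.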

- Performance: For very large data volumes, its performance may not match that of specialized ETL/ELT tools.

- Advanced customization: Can be less flexible than code-based tools for highly customized logic.

- Use case: You’re handling complex data flows and want a scalable, visual integration tool.

Pricing: Not public and highly customized, based on factors like users, data volume, and number of integration endpoints (Snaps). A 30-day free trial is available.

Analytics-focused platforms

These tools combine data integration with advanced analytics and business intelligence. They are designed to consolidate data and prepare, analyze, and visualize it within a single environment.

Databricks

Databricks is a unified data and AI platform built around Apache Spark. It pioneered the "data lakehouse" paradigm, combining the scalability and low cost of a data lake with the performance and reliability of a data warehouse. The platform provides a collaborative environment for data engineers, data scientists, and analysts, and handles large-scale data processing (ETL/ELT), business intelligence, and end-to-end machine learning (ML) workflows.

Noteworthy features:

- Unified platform: Reduces tool sprawl, offering excellent performance and scalability.

- Strong ML and AI integration: Provides comprehensive capabilities for advanced analytics.

- Open architecture: Based on open-source technologies like Delta Lake.

- Complex pricing model: Databricks Unit (DBU)-based pricing can be challenging to predict.

- Spark expertise required: Optimization necessitates familiarity with Spark.

- Cost for continuous workloads: Can be expensive for ongoing, high-volume operations.

- Use case: You’re processing large datasets or building ML models and need collaborative notebooks.

Pricing: Consumption-based, billing per "Databricks Unit" (DBU). Cost per DBU varies by cloud provider, region, and compute tier (e.g., Jobs Compute, SQL Compute, All-Purpose Compute).

Qlik (including Talend and Stitch)

Following its acquisition of Talend (which had previously acquired Stitch), Qlik now offers an integrated platform covering the entire data lifecycle. The portfolio combines Stitch for simple, automated ELT; Talend for complex, enterprise-grade data integration, quality, and governance; and Qlik for advanced, real-time data replication and analytics.

The goal is to provide a unified "data fabric" handling everything from simple data movement to sophisticated transformations and business intelligence.

Noteworthy features:

- End-to-end solution: Offers multi-speed integration, from simple automated ELT (Stitch) to enterprise-grade integration (Talend) and real-time replication (Qlik).

- Strong data quality and governance: Utilizes Talend for secure data management.

- Powerful analytics engine: Provides advanced business intelligence features.

- Complex integration: Integration between components can be challenging.

- Overkill for simpler needs: May be too comprehensive for basic integration requirements.

- Use case: You need integrated data pipelines and dashboards for business users.

Pricing: Modular and complex. Qlik Analytics (e.g., Qlik Sense Business) is per-user. Talend Cloud is per-user/quote-based. Stitch is consumption-based. Enterprise agreements bundle components.

Pentaho (by Hitachi Vantara)

Pentaho is a Business Intelligence (BI) and data integration platform owned by Hitachi Vantara. Its data integration component, Pentaho Data Integration (PDI), is a mature and capable Extract, Transform, Load (ETL) tool.

The platform bundles ETL capabilities with a suite of BI tools for reporting, dashboarding, and data visualization. It is available as a free and open-source community edition and a commercially supported enterprise edition.

Noteworthy features:

- All-in-one platform: Combines ETL and BI capabilities.

- Cost-effective: Offers an open-source option.

- Visual workflow designer: Provides an intuitive interface.

- Extensible: Allows for customization and expansion.

- Dated user interface: The UI can feel old-fashioned.

- Performance challenges: May struggle with very large data volumes.

- Less optimized for cloud: Not as efficient with modern cloud data warehouses.

- Use case: You’re modernizing legacy BI without replacing everything at once.

Pricing: Community Edition is free (open-source). Enterprise Edition is quote-based and typically a subscription model based on usage and support.

Snowflake

Snowflake is a cloud data platform that has evolved from a data warehouse to a comprehensive "Data Cloud." While primarily a data destination, it offers effective built-in data integration features.

These include Snowpipe for continuous data ingestion and Snowpark, which lets developers write complex ETL/ELT transformations in languages like Python, Java, and Scala that execute directly within the platform. This reduces the need for separate data processing engines.

Noteworthy features:

- Separated storage and compute: This architecture allows for independent scaling of resources, optimizing cost and performance.

- Cloud agnostic: Runs on Amazon Web Services (AWS), Azure, and Google Cloud, providing flexibility and avoiding vendor lock-in to a single cloud provider.

- Data sharing capabilities: Its Data Cloud enables secure and controlled data sharing with external parties without data movement.

- Consumption-based pricing: A pay-as-you-go model can be cost-effective for fluctuating workloads but requires careful monitoring to avoid unexpected costs.

- SQL-centric: Primarily SQL-based, which is accessible to many data professionals but may limit complex procedural logic.

- Vendor lock-in concerns: While cloud-agnostic, deep integration with Snowflake features can lead to a degree of platform lock-in.

- Performance optimization: Requires understanding of virtual warehouses and query optimization for efficient performance and cost management.

- Use case: You need a scalable cloud warehouse that handles structured and semi-structured data easily.

Pricing: Usage-based, billed per second for compute, and a flat rate for storage. Costs are influenced by data storage, virtual warehouse usage (compute), and data transfer. Various editions (Standard, Enterprise, Business Critical, Virtual Private Snowflake) offer different features and pricing tiers.
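The per-second compute billing works out as simple arithmetic. Snowflake meters standard virtual warehouses in credits: each size consumes a fixed number of credits per hour (1 for X-Small, doubling with each size up), billed per second with a 60-second minimum each time a warehouse starts or resumes. The sketch below estimates compute cost for a few runs. The dollar price per credit depends on edition, cloud, and region, so the value used here is a placeholder assumption.

```python
# Credits per hour for standard virtual warehouses, by size.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}

def compute_credits(size: str, runtimes_seconds: list) -> float:
    """Credits for a series of warehouse runs: per-second billing, 60-second minimum per resume."""
    billed_seconds = sum(max(60, s) for s in runtimes_seconds)
    return CREDITS_PER_HOUR[size] * billed_seconds / 3600

def compute_cost(size: str, runtimes_seconds: list, price_per_credit: float) -> float:
    # price_per_credit is a placeholder: the real rate depends on edition, cloud, and region.
    return compute_credits(size, runtimes_seconds) * price_per_credit

# A Medium warehouse resumed three times: 20 s (billed as 60 s), 90 s, and 30 min.
runs = [20, 90, 1800]
credits = compute_credits("M", runs)
print(round(credits, 4))                                        # 2.1667
print(round(compute_cost("M", runs, price_per_credit=3.00), 2))  # 6.5
```

This estimate covers query runtime only. A warehouse also consumes credits while idling before it auto-suspends, and storage and data transfer are billed separately, so real bills are usually higher.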

How to choose the right data integration tool

When it comes to selecting the right data integration tool for your business, you should begin with a clear understanding of your requirements, technical capabilities, and budget. The goal is to find the platform that best fits your specific use case, and not necessarily the one with the most features. The evaluation process can be broken down into these key areas:

- Data sources and destinations: This is critical. Make a comprehensive list of all your current and future data sources (e.g., SaaS apps, databases, ERP systems) and destinations (e.g., data warehouses, data lakes), and ensure the tool has reliable, easy-to-use connectors for each.

- Data volume and transfer frequency: Work out how much data you need to process and how often it needs to be updated. This will determine whether you need a tool capable of handling large volumes and near real-time synchronization.

- Data quality and transformations: Your chosen tool should support your data quality requirements, helping to cleanse and prepare data before it reaches its destination. It should also be flexible enough to handle any necessary data transformations.

- Deployment and cloud support: It should also operate seamlessly within your existing infrastructure, whether it's on-premises, in the cloud, or a hybrid environment.

- Pricing and total cost of ownership: Pricing models vary significantly between open-source, SaaS, and enterprise solutions. Consider not only the licensing or subscription fees but also the hidden costs of implementation, maintenance, and the internal technical resources required.

- Support: Ensure the vendor provides a level of support commensurate with your needs and your team's technical abilities. Comprehensive documentation and tutorials are a major plus.
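One practical way to apply these criteria is a weighted scoring matrix: give each criterion a weight that reflects its importance to your team, score each shortlisted tool per criterion, and compare weighted totals. The sketch below shows the arithmetic. The weights, the 1-5 scores, and the tool names are placeholders, not ratings of any real product.

```python
from typing import Dict

# Hypothetical weights (summing to 1.0) reflecting one team's priorities.
WEIGHTS: Dict[str, float] = {
    "connectors": 0.30,
    "volume_and_frequency": 0.20,
    "transformations": 0.15,
    "deployment": 0.10,
    "total_cost": 0.15,
    "support": 0.10,
}

# Placeholder 1-5 scores from an internal evaluation of two shortlisted tools.
SCORES: Dict[str, Dict[str, int]] = {
    "Tool A": {"connectors": 5, "volume_and_frequency": 4, "transformations": 2,
               "deployment": 3, "total_cost": 3, "support": 4},
    "Tool B": {"connectors": 4, "volume_and_frequency": 4, "transformations": 5,
               "deployment": 4, "total_cost": 4, "support": 3},
}

def weighted_score(scores: Dict[str, int], weights: Dict[str, float]) -> float:
    """Sum of weight x score across all criteria."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights should sum to 1")
    return sum(weights[c] * scores[c] for c in weights)

# Rank tools from highest to lowest weighted score.
for tool, scores in sorted(SCORES.items(), key=lambda kv: -weighted_score(kv[1], WEIGHTS)):
    print(f"{tool}: {weighted_score(scores, WEIGHTS):.2f}")
```

Writing the weights down before scoring keeps the comparison honest: a tool with the most features only wins if those features map to the criteria you weighted highly.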

Move toward intelligent, automated integration with Fivetran

Modern data integration is defined by three key trends: embedded connectivity, AI-driven automation, and composable architectures. These advances demand solutions that deliver clean, reliable data.

Whether you're automating ELT pipelines, orchestrating custom workflows, or powering BI dashboards, choosing the right tool depends on your architecture, use case, and the level of control your team needs.
