In today’s business landscape, making smarter decisions faster is a critical competitive advantage. But harnessing timely insights from your company’s data can seem like a headache-inducing challenge. The volume of data — and data sources — is growing every day: on-premise solutions, SaaS applications, databases and other external data sources. How do you bring the data from all of these disparate sources together? Data pipelines.

What is a data pipeline?

A data pipeline is a set of actions and technologies that route raw data from a source to a destination. Data pipelines are sometimes called data connectors.

Data pipelines consist of three components: a source, a data transformation step, and a destination.

- A data source might include an internal database such as a production transactional database powered by MongoDB or PostgreSQL; a cloud platform such as Salesforce, Shopify, or MailChimp; or an external data source such as Nielsen or Qualtrics.

- Data transformation can be performed using tools such as dbt or Trifacta, or can be built manually using a mix of technologies such as Python, Apache Airflow, and similar tools. These tools are mostly used to make data from external sources relevant to each unique business use case.

- Destinations are the repositories in which data is stored once extracted, such as data warehouses or data lakes.
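To make the three components concrete, here is a minimal sketch in Python. It is an illustration only, not how any particular tool works: the `run_pipeline` function, the sample `orders` rows, and the in-memory `warehouse` list are all hypothetical stand-ins for a real source, transformation step, and destination.

```python
from typing import Callable, Iterable

Record = dict[str, object]


def run_pipeline(
    source: Iterable[Record],
    transform: Callable[[Record], Record],
    destination: list[Record],
) -> int:
    """Read each record from the source, transform it, and store it."""
    loaded = 0
    for record in source:
        destination.append(transform(record))
        loaded += 1
    return loaded


# Hypothetical source rows, standing in for a production database or SaaS API.
orders = [
    {"id": 1, "email": " Ana@Example.com ", "total": "19.90"},
    {"id": 2, "email": "bo@example.com", "total": "5.00"},
]


def clean_order(record: Record) -> Record:
    """Trim and lowercase the email, and parse the total as a number."""
    return {
        "id": record["id"],
        "email": str(record["email"]).strip().lower(),
        "total": float(str(record["total"])),
    }


warehouse: list[Record] = []  # stands in for a data warehouse table
count = run_pipeline(orders, clean_order, warehouse)
print(count, warehouse[0])
```

A real pipeline swaps each piece for something sturdier (an API client, a transformation tool, a warehouse loader), but the shape stays the same.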

Data pipelines enable you to centralize data from disparate sources into one place for analysis. You can get a more robust view of your customers, create consolidated financial dashboards, and more.

For example, a company’s marketing and commerce stack might include separate platforms such as Facebook Ads, Google Analytics, and Shopify. If a customer experience analyst wants to make sense of these data points to understand the effectiveness of an ad, they need a data pipeline to manage the transfer and normalization of data from these disparate sources into a data warehouse such as Snowflake.

Additionally, data pipelines can feed data from a data warehouse or data lake into operational systems, such as a customer experience management platform like Qualtrics.

Data pipelines can also ensure consistent data quality, which is critical for reliable business intelligence.

Data pipeline architecture

Many companies are modernizing their data infrastructure by adopting cloud-native tools. Automated data pipelines are a key component of this modern data stack and enable businesses to embrace new data sources and improve business intelligence.

The modern data stack consists of:

- An automated data pipeline tool such as Fivetran

- A cloud data destination such as Snowflake, Databricks Lakehouse, BigQuery, or Amazon Redshift

- A post-load transformation tool such as dbt (short for data build tool, from dbt Labs, formerly Fishtown Analytics)

- A business intelligence engine such as Looker, Chartio, or Tableau

Data pipelines enable the transfer of data from a source platform to a destination, where the data can be consumed by analysts and data scientists and turned into valuable insights.

Consider the case of running shoe manufacturer ASICS. The company needed to integrate data from NetSuite and Salesforce Marketing Cloud into Snowflake to gain a 360° view of its customers.

To do so, the ASICS data team looked at its core application data (in this case, from the popular app Runkeeper) and combined data on signups for loyalty programs with data from other attribution channels. With a data pipeline, ASICS was able to scale its data integration easily.

There are many variations to the workflow above, depending on the business use case and the destination of choice.

The basic steps of data transfer include:

1: Reading from a source

Sources can include production databases such as MySQL, MongoDB, and PostgreSQL and web applications such as Salesforce and MailChimp. A data pipeline reads from each source, typically through an API endpoint or a database connection, at scheduled intervals.
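One common way to read at scheduled intervals without re-reading everything is to keep a cursor, such as the latest `updated_at` timestamp seen so far. The sketch below assumes a hypothetical in-memory `SOURCE_ROWS` list in place of a real API or database, and `read_since` and `sync_once` are illustrative names rather than functions from any library.

```python
from datetime import datetime, timezone
from typing import Optional

# Hypothetical source rows; a real pipeline would query an API or database.
SOURCE_ROWS = [
    {"id": 1, "updated_at": datetime(2024, 1, 1, tzinfo=timezone.utc)},
    {"id": 2, "updated_at": datetime(2024, 1, 2, tzinfo=timezone.utc)},
    {"id": 3, "updated_at": datetime(2024, 1, 3, tzinfo=timezone.utc)},
]


def read_since(cursor: Optional[datetime]) -> list[dict]:
    """Return rows changed after the cursor (all rows if there is no cursor)."""
    if cursor is None:
        return list(SOURCE_ROWS)
    return [row for row in SOURCE_ROWS if row["updated_at"] > cursor]


def sync_once(cursor: Optional[datetime]) -> tuple[list[dict], Optional[datetime]]:
    """Run one scheduled read and return the new rows plus the next cursor."""
    rows = read_since(cursor)
    if rows:
        cursor = max(row["updated_at"] for row in rows)
    return rows, cursor


first_batch, cursor = sync_once(None)     # initial sync reads everything
second_batch, cursor = sync_once(cursor)  # nothing changed, so nothing is read
print(len(first_batch), len(second_batch))
```

A scheduler (cron, Airflow, or similar) would call `sync_once` on each interval and persist the cursor between runs.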

2: Defining a destination

Destinations might include a cloud data warehouse (Snowflake, Databricks Lakehouse, BigQuery, or Redshift), a data lake, or a business intelligence/dashboarding engine.

3: Transforming data

Data professionals need structured, accessible data that they can interpret and explain to their business partners. Data transformation lets practitioners alter the data and its format so that it is relevant and meaningful to their specific business use case.

Data transformation can take many shapes, as in:

- Constructive: adding, copying, or replicating data

- Destructive: deleting fields, records, or columns

- Aesthetic: standardizing salutations, street names, etc. (also known as data cleansing)

Transformations make data well-formed and well-organized — easy for humans and applications to interpret. A data analyst may use a tool such as dbt to standardize, sort, validate and verify the data brought in from the pipeline.
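The three kinds of transformation listed above can be sketched as small Python functions. This is a simplified illustration: the field names, the `SALUTATIONS` lookup, and the sample customer record are assumptions made up for the example, and in practice you would more likely express these steps in a tool such as dbt.

```python
def constructive(record: dict) -> dict:
    """Add a derived field: full_name is built from existing fields."""
    return {**record, "full_name": f"{record['first_name']} {record['last_name']}"}


def destructive(record: dict, drop: set[str]) -> dict:
    """Delete fields the analysis does not need."""
    return {key: value for key, value in record.items() if key not in drop}


# Hypothetical lookup of accepted spellings for each salutation.
SALUTATIONS = {"mr": "Mr.", "mr.": "Mr.", "ms": "Ms.", "ms.": "Ms.", "dr": "Dr.", "dr.": "Dr."}


def aesthetic(record: dict) -> dict:
    """Standardize the salutation spelling (data cleansing)."""
    raw = record["salutation"].strip().lower()
    return {**record, "salutation": SALUTATIONS.get(raw, record["salutation"].strip())}


raw_customer = {
    "salutation": " DR ",
    "first_name": "Sam",
    "last_name": "Lee",
    "internal_note": "imported from legacy CRM",
}

customer = aesthetic(destructive(constructive(raw_customer), {"internal_note"}))
print(customer)
```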

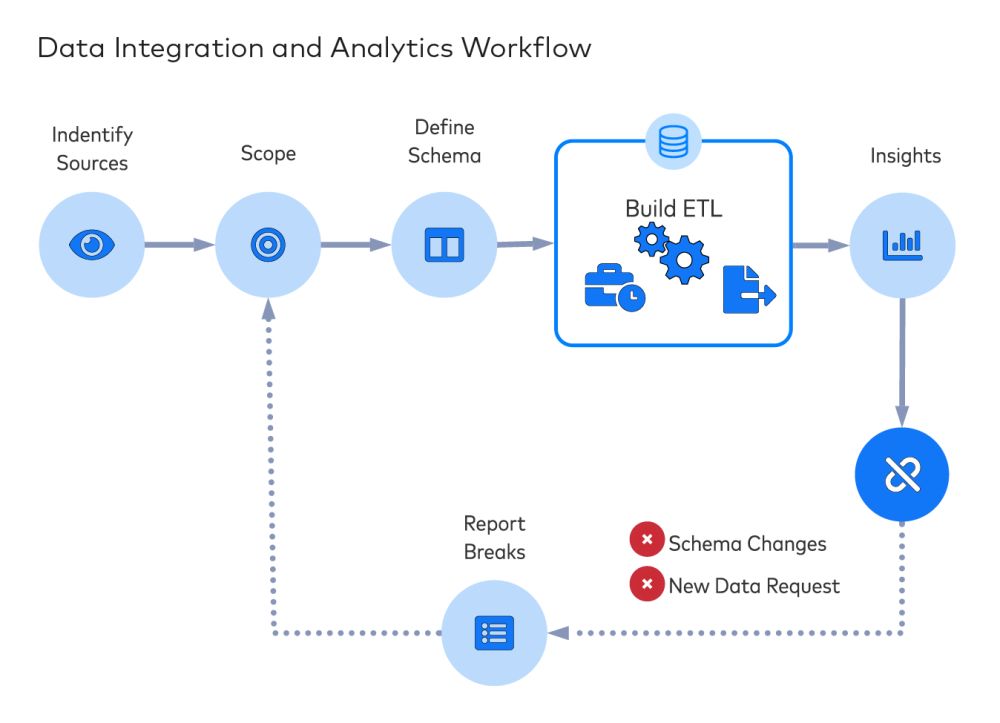

ETL and data pipeline reliability

As with anything in the technology world, things break, data flows included. When your data analytics and business intelligence operations rely on data extracted from various sources, you want your data pipelines to be fast and reliable. But when you’re ingesting external sources such as Stripe, Salesforce, or Shopify, API changes may result in deleted fields and broken data flows.
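A hand-built pipeline usually needs some guard against this kind of change. As a rough sketch, you can compare each incoming record against the fields you expect and flag the difference. The `EXPECTED_FIELDS` set and the sample payload below are hypothetical and do not reflect any vendor's actual API.

```python
EXPECTED_FIELDS = {"id", "email", "amount", "currency"}  # hypothetical contract


def check_schema(record: dict, expected: set[str] = EXPECTED_FIELDS) -> dict[str, set[str]]:
    """Report fields that disappeared from, or were added to, a source record."""
    actual = set(record)
    return {"missing": expected - actual, "unexpected": actual - expected}


# A hypothetical payload after the vendor dropped "currency" and added "fee".
payload = {"id": 7, "email": "ana@example.com", "amount": 1990, "fee": 30}
drift = check_schema(payload)
if drift["missing"]:
    print(f"Source dropped fields: {sorted(drift['missing'])}")
```

Detecting the change is the easy part. Deciding what to do with downstream tables and dashboards is where the maintenance cost comes from.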

Moreover, building a data pipeline is often beyond the technical capabilities (or desires) of analysts. It typically requires the close involvement of IT and engineering talent, along with bespoke code to extract and transform each source of data. Data pipelines demand maintenance and attention much like leaking pipes: companies pour money down them with little to show in return. And that is before you consider the complexity of building an idempotent data pipeline, one that leaves the destination in the same state no matter how many times a sync is retried.
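To give a sense of what idempotency involves, here is a minimal sketch that loads rows keyed by primary key, so that replaying the same batch after a failure does not create duplicates. It uses Python's built-in `sqlite3` module as a stand-in for a warehouse, with a hypothetical `orders` table. A production pipeline also has to handle deletes, partial batches, and out-of-order updates, which this example ignores.

```python
import sqlite3


def load(conn: sqlite3.Connection, rows: list[tuple[int, str, float]]) -> None:
    """Upsert rows by primary key so replaying a batch cannot create duplicates."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO orders (id, email, total) VALUES (?, ?, ?)",
            rows,
        )


conn = sqlite3.connect(":memory:")  # stands in for the destination warehouse
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, email TEXT, total REAL)")

batch = [(1, "ana@example.com", 19.9), (2, "bo@example.com", 5.0)]
load(conn, batch)
load(conn, batch)  # simulated retry after a failure: same batch, same result

row_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(row_count)
```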

With the rapid growth of cloud-based options and the plummeting cost of cloud-based computation and storage, there is little reason to keep building and maintaining pipelines by hand. Today, it’s possible to maintain massive amounts of data in the cloud at a low cost and use a SaaS data pipeline tool to improve and simplify your data analytics.

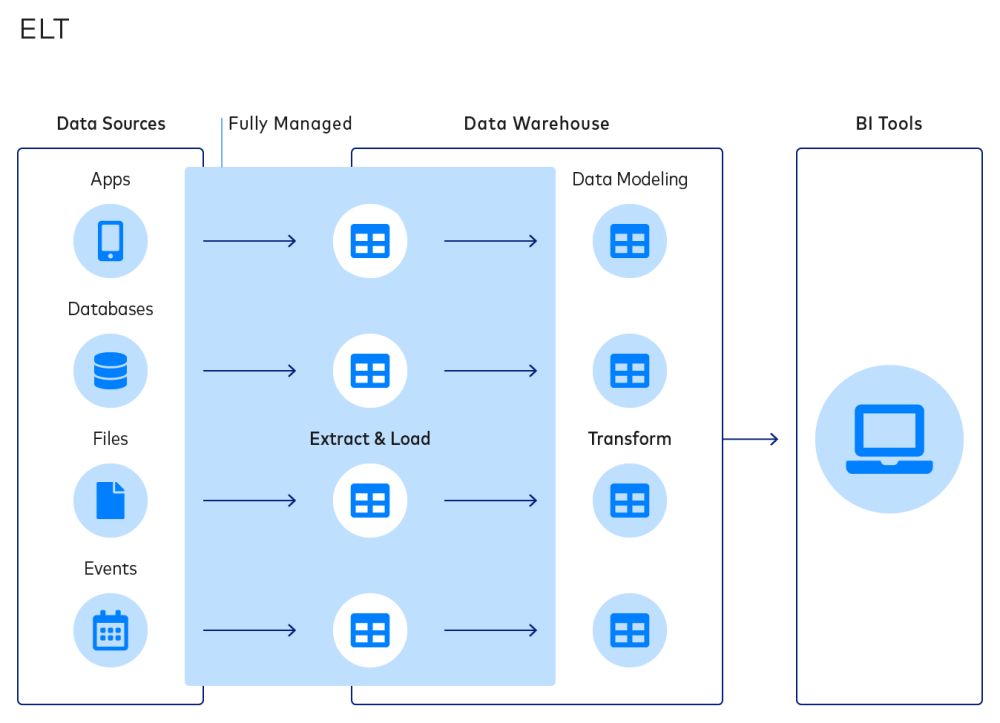

In short, you can now extract and load the data (in the cloud), then transform it as needed for analysis. If you’re considering ETL vs. ELT, ELT is the way to go.
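The ELT ordering can be illustrated in a few lines: raw rows are loaded into the destination untouched, and the transformation is a SQL model defined afterward, inside the destination. The sketch uses an in-memory SQLite database as a stand-in for a cloud warehouse, and the `raw_orders` table and `orders` view are hypothetical names.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stands in for a cloud data warehouse

# Extract and load: raw rows land in the destination untouched.
conn.execute("CREATE TABLE raw_orders (id INTEGER, email TEXT, total TEXT)")
conn.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [(1, " Ana@Example.com ", "19.90"), (2, "bo@example.com", "5.00")],
)

# Transform: a SQL model built inside the destination, after loading.
conn.execute(
    """
    CREATE VIEW orders AS
    SELECT id,
           LOWER(TRIM(email)) AS email,
           CAST(total AS REAL) AS total
    FROM raw_orders
    """
)

elt_rows = conn.execute("SELECT id, email, total FROM orders ORDER BY id").fetchall()
print(elt_rows)
```

Because the raw table is preserved, you can change the transformation later without extracting the data again.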

Automated data connectors

Your data engineers can undoubtedly build connectors to extract data from a variety of platforms. But before building data connectors, review our data pipeline build vs. buy considerations. Cost varies across regions and salary scales, but you can make some quick calculations and decide if the effort and risk are worth it.

Data engineers would rather focus on higher-level projects than moving data from point A to point B, not to mention maintaining those “leaky pipes” mentioned above.

Compare the effort of manually building connectors to an automated data pipeline tool. This kind of tool monitors data sources for changes of any kind and can automatically adjust the data integration process without involving developers.
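For a sense of what "automatically adjust" can mean, the sketch below adds any column the destination table lacks before inserting a record. It is a toy version built on SQLite, not a description of how any commercial tool is implemented. The `sync_record` function and the `customers` table are hypothetical, and real tools also deal with type changes, renames, and removed columns.

```python
import sqlite3


def sync_record(conn: sqlite3.Connection, table: str, record: dict) -> list[str]:
    """Insert a record, first adding any columns the destination lacks."""
    # Identifiers cannot be bound as SQL parameters, so validate them first.
    for name in (table, *record):
        if not name.isidentifier():
            raise ValueError(f"unsafe identifier: {name!r}")

    existing = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    added = [column for column in record if column not in existing]
    for column in added:
        conn.execute(f"ALTER TABLE {table} ADD COLUMN {column}")

    columns = ", ".join(record)
    placeholders = ", ".join("?" for _ in record)
    with conn:  # commit the insert, or roll back on error
        conn.execute(
            f"INSERT INTO {table} ({columns}) VALUES ({placeholders})",
            list(record.values()),
        )
    return added


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, email TEXT)")

sync_record(conn, "customers", {"id": 1, "email": "ana@example.com"})
# The hypothetical source starts sending a new "plan" field.
new_columns = sync_record(conn, "customers", {"id": 2, "email": "bo@example.com", "plan": "pro"})
print(new_columns)
```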

This is why automated data connectors are the most effective way to reduce the burden on programmers and give data analysts and data scientists direct access to the data they need.

And with data transfer (or data pipelining) handled, data engineers are free to play a more valuable, interesting role: to catalog data for internal stakeholders and be the bridge between analysis and data science.

Why Fivetran

Fivetran automated data connectors are prebuilt and preconfigured and support 700+ data sources, including databases, cloud services, and applications. Fivetran connectors automatically adapt as vendors make changes to schemas by adding or removing columns, changing a data element’s type, or adding new tables. Lastly, our pipelines manage normalization and create ready-to-query data assets for your enterprise that are fault-tolerant and auto-recovering in case of failure. Learn more about our automated data integration solutions.