I was recently challenged to build a custom Fivetran connector that integrates Snowflake Cortex Agent tools into the Fivetran data movement process prior to loading data.

I figured, why not make this an end-to-end industry solution, with a custom connector built using the Fivetran Connector SDK, Snowflake Cortex Agents, a dbt transformation package, and Census data activation in Slack? This architecture connects data ingestion, AI, transformation, and data activation in a single workflow, transforming data and moving it in multiple directions as needed.

Intelligence is more than data

Suppose you are a ranch management operator, managing multiple ranches, and have to monitor the health and safety of your livestock in response to weather changes. Here’s what most weather alert systems give farm and ranch managers:

“Cold front approaching: Low 28°F, winds 15–20 mph.”

By the time someone reads it, identifies which livestock are at risk (especially across multiple ranches), reviews current and historical records, and decides what to do, the weather is already here.

Most farm and ranch operations could benefit from more proactive and actionable data intelligence:

“ALERT: 144 Beef Cattle at FARM_NAME face dangerous conditions. 15 injured animals are highly vulnerable. Historical data shows a 35% risk increase. Actions: Move injured cattle indoors within 6 hours, increase feed 20%, prepare heated water, and stock antibiotics.”

The difference? AI enrichment during data ingestion, automated transformation into actionable views, and delivery to a mobile device for action. Four technologies work together here to create actionable, on-the-fly intelligence: Fivetran, Snowflake Cortex, dbt, and Census.

How this architecture differs from typical data pipelines

My project runs a complete pipeline from a weather API to a Slack notification. It differs from typical data pipelines in three ways: it combines AI enrichment with ingestion, triggers transformations automatically, and operationalizes alerts and analytics by sending the intelligence to the device an operator always carries.

AI enrichment happens during ingestion

The Fivetran custom connector doesn’t just pull weather forecasts from NOAA. It calls a Snowflake Cortex Agent during data movement before anything lands in Snowflake. The agent queries existing livestock health records, correlates weather patterns with historical incidents, and adds five intelligence fields to every forecast period, specifically:

- Livestock risk assessment: Analyzing the risks and health impacts of incoming weather on livestock given their current, specific health status.

- Affected farms: Identifying farms at elevated risk based on location and livestock health.

- Species risk matrix: Assessing risks by animal type. For example, beef cattle face different cold-weather risks than dairy cattle, and injured animals need different protocols than healthy herds.

- Recommended actions: Recommending specific preventative measures, in order of priority, based on historical data on efficacy.

- Historical correlations: Relating current circumstances to past weather events, health incidents, and costs.

These fields exist in the source table immediately after sync, and no post-processing pipeline is required. Note that you can also enrich the data once it lands in Snowflake; here, we chose to enrich with AI during ingestion as a proof of concept for Fivetran Connector SDK’s capabilities.
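As a rough illustration of the enrichment step, the sketch below merges agent output into one forecast period before the row is handed off for loading. The five column names, the record shape, and the `ask_agent` callable are assumptions made for this example, not the connector's actual code. In a real connector, `ask_agent` would wrap the Cortex Agent REST call.

```python
from typing import Callable, Dict

# Hypothetical column names for the five intelligence fields described above.
ENRICHMENT_FIELDS = (
    "livestock_risk_assessment",
    "affected_farms",
    "species_risk_matrix",
    "recommended_actions",
    "historical_correlations",
)


def enrich_period(
    period: Dict[str, object],
    ask_agent: Callable[[Dict[str, object]], Dict[str, str]],
) -> Dict[str, object]:
    """Return a copy of one forecast period with the five agent fields added.

    `ask_agent` is an assumed stand-in for the Cortex Agent call. Fields the
    agent does not return are set to None, so every row has the same columns
    even when enrichment is only partially successful.
    """
    answer = ask_agent(period)
    enriched = dict(period)  # leave the raw forecast record untouched
    for field in ENRICHMENT_FIELDS:
        enriched[field] = answer.get(field)
    return enriched


def fake_agent(period: Dict[str, object]) -> Dict[str, str]:
    # Stand-in for the real agent, used only to exercise the sketch.
    return {"livestock_risk_assessment": "elevated", "affected_farms": "FARM_A"}


row = enrich_period({"zip_code": "00000", "low_f": 28}, fake_agent)
```

Passing the agent in as a callable keeps the merge logic testable without a Snowflake connection, which is handy when debugging the connector locally.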

Transformations run automatically

When Fivetran finishes syncing, it triggers a dbt transformation job against my dbt project, so transformations follow whatever sync schedule I choose. Within a few seconds, dbt creates five analytical views: high-risk alerts, farm action items, species-specific risks, daily briefings, and executive KPIs. There are no manual refreshes or separate job schedules to maintain. Views automatically refresh in Snowflake with the latest data on every sync. The Fivetran sync can run anywhere from every minute to once every 24 hours, and I have this one set to run at 7:00 AM CT every day.

Insights go where people work

Census monitors the dbt views and syncs KPI summaries to Slack on your schedule. Farm and ranch managers receive alerts on their mobile devices (a constant across any industry) at approximately 7:00 AM or immediately after any sync you may want to trigger outside the normal schedule. There’s no logging into dashboards or checking email. The intelligence arrives on the device they always have with them.

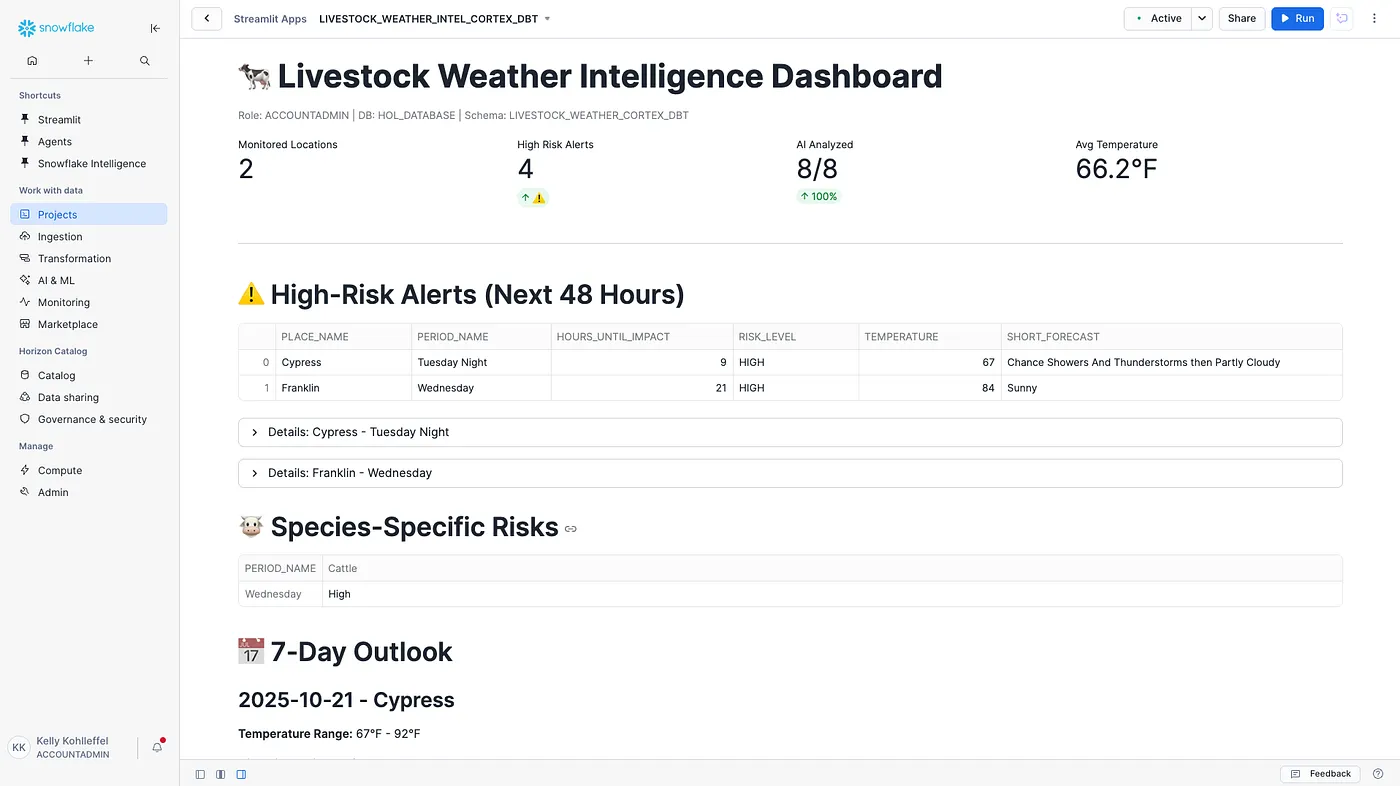

Bonus: Use Streamlit to self-serve analytics

A Streamlit app in Snowflake queries the same dbt views for interactive exploration. Drill into affected farms, compare species risks, and review 7-day outlooks. You never need to leave the Snowflake platform to explore your data.

How the pieces work together

From end to end, the full workflow looks like this:

- Weather API is updated with new meteorological data

- Fivetran custom connector extracts data

- Cortex AI agents read extracted data and enrich it

- Fivetran custom connector loads data into Snowflake

- dbt transforms data in Snowflake using views

- Census moves transformed data points into Slack

- User receives a Slack alert with the latest intelligence

Fivetran does most of the heavy lifting

The custom connector handles weather API pagination, rate limiting, error recovery, and Cortex Agent REST calls. You deploy the connector once, and it keeps running on its schedule without further intervention. Fivetran’s orchestrator manages sync timing and automatically triggers dbt after completion.
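To make the error recovery part concrete, here is a minimal sketch of one common approach: retrying a failed call with exponential backoff. The function name, the defaults, and the injected `sleep` parameter are my own choices for illustration, and the connector's actual retry policy may differ.

```python
import time
from typing import Callable, List, TypeVar

T = TypeVar("T")


def call_with_retry(
    fetch: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `fetch`, retrying on any exception with exponential backoff.

    The wait doubles after each failure (base_delay, 2x, 4x, ...). The final
    failure is re-raised so the sync fails loudly instead of loading bad data.
    """
    for attempt in range(max_attempts):
        try:
            return fetch()
        except Exception:
            if attempt == max_attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
    raise RuntimeError("max_attempts must be at least 1")


# Demo: a fake weather call that fails twice, then succeeds.
attempts: List[int] = []
delays: List[float] = []


def flaky_fetch() -> dict:
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("temporary failure")
    return {"periods": []}


# Record the delays instead of actually sleeping.
result = call_with_retry(flaky_fetch, sleep=delays.append)
```

The same wrapper could sit around both the weather API request and the Cortex Agent call, since either can fail transiently.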

Snowflake is the intelligence engine

The Cortex Agent that enriches weather data during ingestion runs on Snowflake compute. The raw table where Fivetran lands enriched data lives in Snowflake. All processing, storage, and compute services are on a single platform, with no data movement between key services and no authentication sprawl. Snowflake Intelligence provides the semantic models and agent framework that turn weather forecasts into livestock risk assessments. The Streamlit data app also runs inside Snowflake, queries the same dbt views Census uses, and updates automatically.

dbt creates the analytics layer

dbt, orchestrated by Fivetran, pulls the latest code, runs transformations, and updates views in seconds. You don’t have to copy SQL into Snowflake worksheets or perform any manual view refreshes.

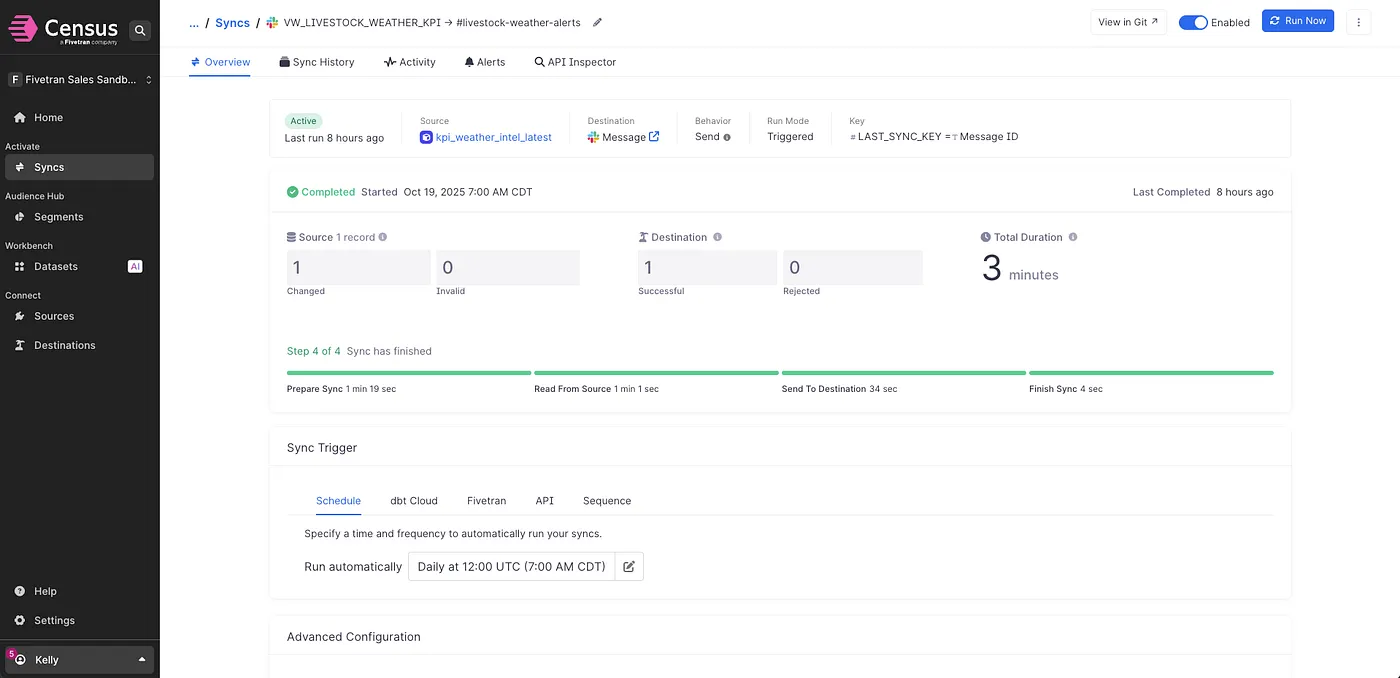

Fivetran Census operationalizes data

Census monitors the _FIVETRAN_SYNCED timestamp to detect fresh data and posts formatted messages to Slack. The same pattern works for any destination Census supports.
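The freshness check boils down to comparing the newest _FIVETRAN_SYNCED value against the last one already acted on. The sketch below shows that idea in isolation. It is not how Census implements it internally, and the function name and sample timestamps are made up for the example.

```python
from datetime import datetime, timezone
from typing import Iterable, Optional


def has_fresh_data(
    synced_timestamps: Iterable[datetime],
    last_seen: Optional[datetime],
) -> bool:
    """True when the newest _FIVETRAN_SYNCED value is later than `last_seen`."""
    newest = max(synced_timestamps, default=None)
    if newest is None:
        return False  # empty view, so there is nothing to send
    return last_seen is None or newest > last_seen


# Illustrative values only: one row synced a day after the previous run.
previous_run = datetime(2025, 1, 1, 13, 0, tzinfo=timezone.utc)
rows = [datetime(2025, 1, 2, 13, 0, tzinfo=timezone.utc)]
is_fresh = has_fresh_data(rows, previous_run)
```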

Connecting data end-to-end bridges the gap between having data somewhere and ensuring people can actually use it. If insights stay trapped in applications that require training or special tools to use, you haven’t solved as much as you can.

Setup takes an hour

In order of execution, here’s what you need to do to build this architecture:

Prepare Snowflake environment (10 minutes)

- Deploy a Cortex Agent with a semantic model (if not already configured)

- Create a target database for incoming data

- Generate Personal Access Token (PAT) for the Fivetran service

- Grant necessary permissions for dbt and Census roles

Note: If you are building an agent from scratch, allow the extra time required for that build. The Snowflake Agent UI is very straightforward and simple to use. The agent needs a semantic model (used with Cortex Analyst) that understands your livestock health data structure.

Build, debug, and deploy the connector (15 minutes)

- Install Fivetran SDK: pip install fivetran-connector-sdk

- Set up configuration.json with ZIP codes and farm mappings

- Add a Snowflake PAT for Cortex Agent access

- Run ./debug.sh to test locally

- Run ./deploy.sh to push to Fivetran

- Start the connector sync to Snowflake in the Fivetran UI
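For the configuration.json step, here is a small sketch of how the connector might read ZIP codes and farm mappings. It assumes configuration values arrive as strings, and the key names `zip_codes` and `farm_mapping` are hypothetical, so adjust them to match your own file.

```python
import json
from typing import Dict, List, Tuple


def parse_config(configuration: Dict[str, str]) -> Tuple[List[str], Dict[str, str]]:
    """Parse ZIP codes and ZIP-to-farm mappings from string-valued config.

    Assumed (hypothetical) keys: "zip_codes" is a comma-separated string and
    "farm_mapping" is a JSON object encoded as a string.
    """
    zip_codes = [z.strip() for z in configuration["zip_codes"].split(",") if z.strip()]
    farm_mapping = json.loads(configuration.get("farm_mapping", "{}"))
    # Fail fast if a ZIP code has no farm, rather than loading unlabeled rows.
    missing = [z for z in zip_codes if z not in farm_mapping]
    if missing:
        raise ValueError(f"No farm mapped for ZIP codes: {missing}")
    return zip_codes, farm_mapping


# Placeholder values standing in for a real configuration.json.
example_config = {
    "zip_codes": "00001, 00002",
    "farm_mapping": '{"00001": "FARM_A", "00002": "FARM_B"}',
}
zips, farms = parse_config(example_config)
```

Validating the mapping up front means a typo in the configuration surfaces during the local debug run instead of after deployment.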

Set up dbt transformations (20 minutes)

- Create a GitHub repository with a dbt project structure

- Define five analytical models in models/marts/

- Add deployment.yml for Fivetran integration

- Connect the repository to the Fivetran destination

- Add SSH deploy key to GitHub

- Link dbt transformations to the connector

Configure Census sync (15 minutes)

- Connect Snowflake source and Slack destination

- Create a dataset querying VW_LIVESTOCK_WEATHER_KPI view

- Set up a Slack message template with KPI fields

- Configure sync schedule (daily at 7 AM or based on your required schedule)

- Test with manual trigger

- View in Slack

(Optional) Launch Streamlit data app (10 minutes)

- Open Snowflake Projects > Streamlit Apps

- Set the database and schema context

- Paste the Streamlit app code into the editor

- Run

That’s it. The next Fivetran sync will populate the table, trigger dbt, refresh views, and send a Slack notification.

How it looks live

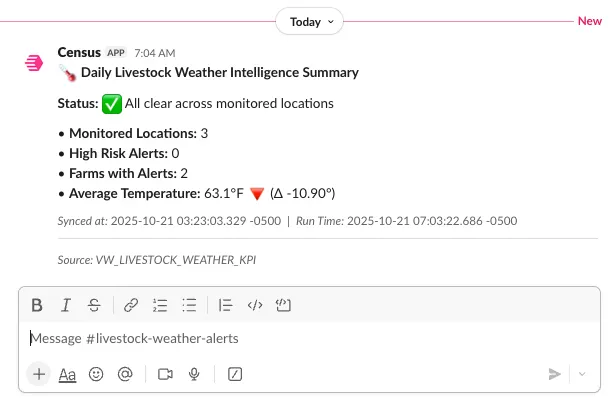

Every morning at 7 AM, you get a Slack message triggered by Census showing:

- Number of monitored locations

- Current high-risk alerts

- Farms requiring attention

- Average temperature and 24-hour trend
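The four items above map naturally onto a message template. Census handles this through its own Slack template configuration, so the sketch below only shows the shape of the message. The dictionary keys are hypothetical and would need to match the columns in your KPI view.

```python
from typing import Mapping


def format_kpi_message(kpi: Mapping[str, object]) -> str:
    """Render one KPI row as the text of a daily Slack briefing."""
    trend = float(kpi["temp_trend_24h_f"])
    direction = "up" if trend > 0 else "down" if trend < 0 else "flat"
    return "\n".join([
        "Livestock weather briefing",
        f"Monitored locations: {kpi['monitored_locations']}",
        f"High-risk alerts: {kpi['high_risk_alerts']}",
        f"Farms requiring attention: {kpi['farms_requiring_attention']}",
        f"Average temperature: {float(kpi['avg_temp_f']):.1f}°F "
        f"({direction} {abs(trend):.1f}°F over 24 hours)",
    ])


# Made-up sample row, for illustration only.
sample_row = {
    "monitored_locations": 5,
    "high_risk_alerts": 2,
    "farms_requiring_attention": 1,
    "avg_temp_f": 31.5,
    "temp_trend_24h_f": -4.0,
}
message = format_kpi_message(sample_row)
```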

You can go to Streamlit for details: which specific farms face elevated risk, which species need priority attention, what actions to take in the next 6–12 hours, and historical context showing similar weather patterns and outcomes.

AI analyzes everything during Fivetran data ingestion, dbt automatically creates actionable views, and Census delivers formatted alerts to Slack. Now, you can make decisions in minutes instead of hours. Operations staff see alerts on mobile devices and respond immediately.

Without this workflow, every weather alert would mean manually checking current livestock health records, consulting historical spreadsheets, drafting action plans, and sending emails: 2–4 hours of work, repeated across multiple locations.

Bonus: Combine this with MCP for programmatic control

Census triggers automatically after Fivetran syncs, but what if the existing schedule doesn’t align with your actual needs? Maybe you want a midday update, but Fivetran is set to run at 7 AM. You could also control the timing using Model Context Protocol (MCP).

Based on the schedule you choose, a simple Python script sends a Slack notification asking: “Would you like to check and refresh if needed?” with two buttons: Yes or Skip. Click Yes, and the agent provides text to paste into Claude Desktop, which orchestrates everything through local MCP servers. Claude checks Snowflake for data age, triggers a Fivetran sync if the data is stale, runs dbt to rebuild the views, and confirms the data’s freshness, all in about 5 minutes.
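The decision Claude makes here can be sketched as a simple staleness check followed by two actions. In the sketch below, `trigger_sync` and `run_dbt` are assumed callables standing in for whatever the MCP servers expose, and the four-hour threshold and timestamps are arbitrary examples.

```python
from datetime import datetime, timedelta, timezone
from typing import Callable, List


def refresh_if_stale(
    last_synced: datetime,
    now: datetime,
    max_age: timedelta,
    trigger_sync: Callable[[], None],
    run_dbt: Callable[[], None],
) -> List[str]:
    """Sync and rebuild views only when the data is older than `max_age`.

    Returns the steps taken so the caller can report them back to the user.
    """
    if now - last_synced <= max_age:
        return ["data is fresh; nothing to do"]
    trigger_sync()  # refresh the source table first
    run_dbt()       # then rebuild the views on top of it
    return ["triggered Fivetran sync", "ran dbt"]


# Demo with recording stubs instead of real Fivetran or dbt calls.
calls: List[str] = []
steps = refresh_if_stale(
    last_synced=datetime(2025, 1, 1, 13, 0, tzinfo=timezone.utc),
    now=datetime(2025, 1, 1, 18, 0, tzinfo=timezone.utc),
    max_age=timedelta(hours=4),
    trigger_sync=lambda: calls.append("sync"),
    run_dbt=lambda: calls.append("dbt"),
)
```

The order matters: running dbt before the sync completes would rebuild the views on the old data.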

Why this pattern matters

This project demonstrates what is possible when ingestion, intelligence, transformation, and activation operate as a single workflow rather than separate projects. This pipeline uses four technologies that do exactly what they’re designed to do:

- Fivetran: Get high-quality, trusted, usable data into Snowflake, enriched and ready to use. This is not just data movement but intelligent ingestion that adds value during extraction.

- Snowflake Cortex: Apply agentic AI to data without moving it to external services. Here, agent calls happen during the Fivetran sync, but enriching after the data lands works too.

- dbt: Transform data into analytics views automatically. Any changes to the dataset and the intelligence produced are reflected in production views within seconds, triggered by Fivetran after every sync.

- Census: Make data operational. Query views, detect freshness, and push to Slack. One sync definition handles both daily summaries and emergency alerts.

The livestock monitoring use case is specific, but the pattern applies everywhere: financial alerts, equipment maintenance, customer health scores, inventory optimization, and compliance monitoring. It fits anywhere external data needs intelligence before (or after) it lands, automatic transformation into actionable views, and delivery to operational systems. You can prototype these workflows in a morning or afternoon and explore what’s possible when data movement, AI, transformation, and activation align.

If your team wants to stop fighting complex data stacks and start delivering AI outcomes quickly, reach out. We’ll spend an hour together, walk through your use case, and show you how Fivetran, Snowflake, dbt, and Census turn raw data into operational intelligence with no manual ETL required.
