Healthcare teams are building AI workflows. Most of them are discovering their data pipelines weren't designed for this. That’s why I sat down with Murali Gandhirajan, Global Healthcare & Lifesciences CTO at Snowflake, to discuss how AI success starts with data: how quickly you can move it, how reliably you can govern it, and how easily you can put it to work.

[CTA_MODULE]

AI workflows need more than analytics-ready data

Traditional data pipelines were built for dashboards and reports. AI workflows need something different — faster, more reliable access to structured, semi-structured, and unstructured data, all governed in one place. Snowflake provides that foundation, supporting multimodal data such as claims, EHR records, FHIR JSON, clinical notes, and call transcripts, enabling teams to apply AI directly through built-in services in Snowflake Cortex, such as Snowflake Intelligence.

Fivetran complements this by reducing upstream friction. Instead of building and maintaining custom pipelines, teams use Fivetran to continuously and securely move data from source systems into Snowflake with built-in change data capture, automatic schema handling, and no manual orchestration. Data arrives standardized and ready for analytics or AI, with no ongoing maintenance required.

“It's about having access to the data, and then, once you have access to the data, it is about the speed to the business impact.”

— Murali Gandhirajan, Global Healthcare & Lifesciences CTO at Snowflake

Together, Fivetran and Snowflake remove the infrastructure work that keeps teams from shipping AI use cases. Since both platforms are fully managed, teams avoid the usual infrastructure tax:

- Provisioning and managing infrastructure

- Writing and maintaining ingestion code

- Handling retries, failures, or schema drift

With Snowflake and Fivetran, production pipelines can typically be created in minutes, enabling AI agents in Snowflake Cortex to generate actionable insights almost immediately after data becomes available.

Reference architecture

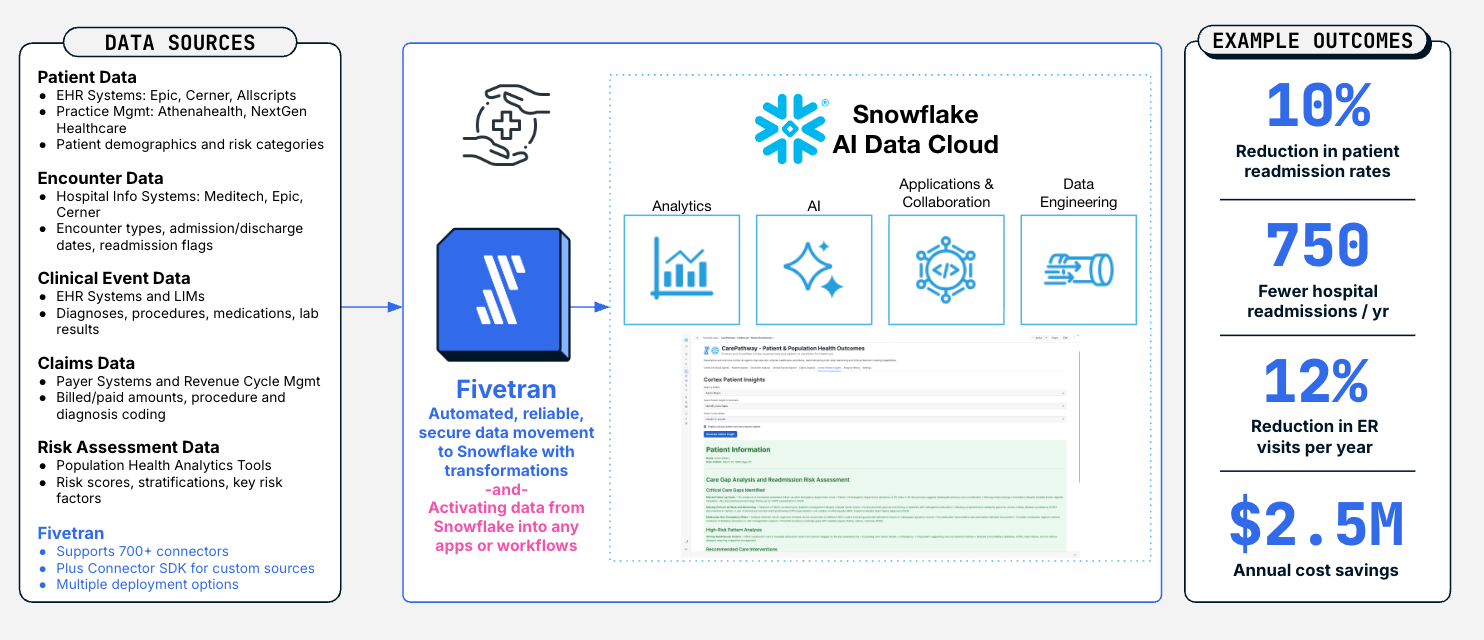

At a high level, the joint architecture looks like this:

With this architecture, teams can iterate on AI use cases rather than on pipeline maintenance. Data engineers shift from building connectors to building intelligence. Business stakeholders receive insights in days rather than quarters.

How to get started: A practical walkthrough

Step 1: Start small with your idea or vision

Don’t overscope:

- Focus on a single, high-value use case

- Use trusted datasets

- Focus on delivering results in a sprint, not months

Step 2: Set up the foundation to support the use case

- Set up Snowflake as your unified data and AI layer: Create or use an existing Snowflake account. Once data lands in Snowflake (via Fivetran), it’s immediately accessible for analytics, transformations, and AI workloads, all on the same platform and security model.

- Connect source systems with Fivetran: Using Fivetran, create a connector for your source systems, for example, Postgres, Epic, or another healthcare data source. Once configured, Fivetran continuously replicates data into Snowflake with reliability guarantees and minimal operational overhead.

- Prepare data inside Snowflake: Snowflake treats semi-structured formats (JSON, FHIR, XML) as first-class objects, allowing you to query them directly using SQL dot notation with no preprocessing required. Fivetran also offers a range of data transformation options to help you move from raw data to AI-ready data.

- Apply AI using Snowflake Cortex Agents: With foundational data in place, teams can deploy Cortex Agents that orchestrate across both structured and unstructured data sources. These agents don't just answer questions; they plan multi-step workflows, determine which tools to use (Cortex Analyst for SQL generation and Cortex Search for document retrieval), and review results to improve accuracy. For example, an agent can analyze patient risk scores in structured tables, search through unstructured clinical notes for context, and combine both into actionable care recommendations, all within Snowflake's governance boundary. This orchestration occurs through natural language queries, with the agent autonomously breaking down complex requests into subtasks and routing them to the appropriate services.

- Multi-step reasoning: Agents break complex questions into subtasks and determine the sequence of operations needed.

- Unified data access: Query structured data via Cortex Analyst and search unstructured documents via Cortex Search in a single workflow.

- Autonomous tool selection: Agents automatically choose whether to generate SQL, search documents, or call custom tools based on the query.

- Reflection and iteration: After each step, agents evaluate results and determine next actions — whether that's refining the query, gathering more data, or delivering the final answer.

In the webinar demo, we showed agents analyzing patient history in structured tables and correlating risk signals across sources to quickly produce care gap recommendations, all without exporting data or managing external APIs.

Step 3: Ship one use case and iterate

Once your data is flowing and AI workflows are in place, resist the urge to expand too quickly. Instead, ship a single, focused use case into production and validate its impact with real users.

Start by operationalizing one outcome, for example, identifying high-risk patients, prioritizing accounts, or surfacing care gaps, and make it available where decisions are made. Use feedback from business users to refine logic, improve prompts, and adjust datasets, rather than redesigning the architecture.

Because Snowflake and Fivetran abstract away infrastructure, data movement, and reliability, iteration becomes faster and lower-risk. Teams can add new data sources, enrich existing models, or extend AI agents to adjacent use cases without rebuilding pipelines or increasing operational overhead. This incremental approach allows organizations to move from proof of concept to measurable value quickly and then scale with confidence.

Real-world results: Sharp HealthCare powers AI with Fivetran and Snowflake

Sharp HealthCare serves more than 1 million patients annually across 7 hospitals, relying on timely, accurate data to support clinical operations, workforce planning, and patient care. However, critical data lived across siloed on-premise systems, SaaS tools, and unstructured sources, forcing the engineering team to spend much of its time maintaining fragile, custom pipelines instead of enabling innovation.

To break this cycle, Sharp adopted Fivetran to automate data ingestion into Snowflake, creating a centralized, governed data platform that supports advanced analytics and AI. With Fivetran’s Epic connector, Sharp now replicates data directly into Snowflake automatically, securely, and in real time.

As a result, in just 90 days, Sharp saved nearly $287,000 and unlocked faster reporting and improved clinical insights. More importantly, they laid the groundwork for Sharp’s internal AI initiatives, including AI-based applications and a custom GPT implementation that enables staff to access policies and insights in seconds, delivering outcomes previously not possible.

By pairing Fivetran’s automated data movement with Snowflake’s unified data and AI platform, Sharp has transformed its data operations from a maintenance burden into an AI-ready engine enabling smarter decisions, faster innovation, and more responsive, patient-centered care.

[CTA_MODULE]